Introduction

Sentiment and emotion analysis are critical tools in knowledge aggregation and interfacing with people. As we move from the industrial age, where wealth is measured in capital, into the information age, Barbara Endicott-Popovsky suggests that knowledge will be the new measure of wealth [1]. According to Addleson, knowledge management typically takes one of two approaches, focusing either on people as knowledge workers or on the tools and data [2]. With the rapid development of neural networks, these two knowledge management foci can merge as machines become the knowledge workers. As machines take on that role, there will be an increased need for them to recognize emotion as well as sentiment. Current state-of-the-art methods for distinguishing sentiment and emotion utilize artificial neural networks. This article discusses artificial neural networks and how they are used in emotion and sentiment analysis, and looks at how these technologies can allow machines to be a more integral part of knowledge management and the cyber domain.

In this article, the use of sentiment analysis is based on Scherer’s typology of affective states [3, 4, 5]. According to Scherer, sentiment analysis focuses on attitudes, which are enduring beliefs towards objects or persons. Due to the enduring nature of sentiment, written views are a common source for this analysis. Following Scherer’s typology, emotion is considered a brief, organically synchronized event; thus, emotion analysis is highly temporal and can be triggered by any stimulus. In terms of emotion analysis and detection, the focus will be on the seven universal emotions identified by Ekman [6]: joy, surprise, fear, anger, sadness, disgust, and contempt. The data used as the basis for emotion analysis, as discussed in this article, consists of images or video capturing a specific emotion in time. Analysis of these two affective states requires different approaches due to the medium by which they are conveyed.

Background

Modern sentiment and emotion analysis are built on decades of psychological research. Statistical Natural Language Processing (NLP), as discussed by Manning and Schütze [7], is critical for sentiment analysis. With an understanding of word usage frequency, various methods can be used to assign a sentiment to a sentence, a paragraph, or even larger portions of text. Supervised and unsupervised sentiment analysis are the two approaches used. These methods typically couple a sentiment lexicon with a machine learning algorithm such as bagging, K-Means, a support vector machine, a naive Bayes classifier, or some hybrid of these [8, 9, 10, 11].
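To make the word-frequency idea concrete, the following is a minimal sketch of a supervised naive Bayes sentiment classifier. The training sentences, labels, and function names are hypothetical and chosen only for illustration; a practical system would use a large labeled corpus and a proper tokenizer.

```python
import math
from collections import Counter, defaultdict

# Hypothetical toy training data; a real system would use a large labeled corpus.
TRAIN = [
    ("great product and excellent support", "pos"),
    ("i love this wonderful phone", "pos"),
    ("terrible service and awful quality", "neg"),
    ("i hate this horrible phone", "neg"),
]


def train_naive_bayes(examples):
    """Count word frequencies per class for a multinomial naive Bayes model."""
    word_counts = defaultdict(Counter)
    class_counts = Counter()
    vocab = set()
    for text, label in examples:
        tokens = text.lower().split()
        word_counts[label].update(tokens)
        class_counts[label] += 1
        vocab.update(tokens)
    return word_counts, class_counts, vocab


def classify(text, model):
    """Return the label with the highest log-probability for the text."""
    word_counts, class_counts, vocab = model
    total_docs = sum(class_counts.values())
    scores = {}
    for label in class_counts:
        # Start from the log prior of the class.
        score = math.log(class_counts[label] / total_docs)
        total_words = sum(word_counts[label].values())
        for token in text.lower().split():
            if token not in vocab:
                continue  # words never seen in training carry no evidence
            # Laplace (add-one) smoothing avoids zero probabilities.
            count = word_counts[label][token] + 1
            score += math.log(count / (total_words + len(vocab)))
        scores[label] = score
    return max(scores, key=scores.get)


MODEL = train_naive_bayes(TRAIN)
```

Calling `classify("excellent phone", MODEL)` selects the positive class, because "excellent" appears only in the positive training sentences while "phone" is equally frequent in both classes.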

Just as NLP is a critical stepping stone for work in sentiment analysis, computer vision is critical to the area of automated emotion detection [12, 13]. Ekman [14] devised the Facial Action Coding System (FACS), which mapped facial muscle actions, known as Action Units (AU), and combinations of AUs to the facial expressions related to the seven universal emotions. Various methods have been used to automate the detection of emotion, as well as other factors such as attention, from video and images. Initially, basic facial landmark methods were used to establish an AU, and from there, a probability of the associated emotion was calculated [12, 15]. While the trend for emotion detection is moving towards artificial neural networks, the field is still young, and there has been considerable exploration of other methods for emotion detection from images and video. These other methods typically leverage computer vision techniques like Histogram of Oriented Gradients (HOG), Histogram of Image Gradient Orientation (HIGO), Histograms of Optical Flow (HOF), and Local Binary Patterns (LBP), coupled with Support Vector Machines (SVM) or support vector regression (SVR) [16, 17].
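As an illustration of one of the hand-crafted descriptors named above, the sketch below computes a basic 8-neighbor Local Binary Pattern code for each interior pixel and summarizes an image as a normalized histogram of those codes. The function names are hypothetical, the neighbor ordering is one of several conventions, and the classifier stage (for example an SVM trained on these histograms) is not shown.

```python
import numpy as np


def local_binary_pattern(image):
    """Return the 8-neighbor LBP code for each interior pixel of a 2-D array."""
    image = np.asarray(image, dtype=float)
    rows, cols = image.shape
    center = image[1:-1, 1:-1]
    # Neighbor offsets in clockwise order, starting at the top-left pixel.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = np.zeros(center.shape, dtype=np.uint8)
    for bit, (dr, dc) in enumerate(offsets):
        # Shifted view of the image aligned with the interior pixels.
        neighbor = image[1 + dr: rows - 1 + dr, 1 + dc: cols - 1 + dc]
        # Set this bit wherever the neighbor is at least as bright as the center.
        codes |= (neighbor >= center).astype(np.uint8) * np.uint8(1 << bit)
    return codes


def lbp_histogram(image):
    """Normalized 256-bin histogram of LBP codes, usable as a feature vector."""
    codes = local_binary_pattern(image)
    hist = np.bincount(codes.ravel(), minlength=256).astype(float)
    return hist / hist.sum()
```

A perfectly flat patch yields code 255 everywhere, while a pixel brighter than all of its neighbors yields code 0; textured regions such as the corners of the mouth or eyes produce a richer mix of codes.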

Current Technologies

Artificial Neural Networks

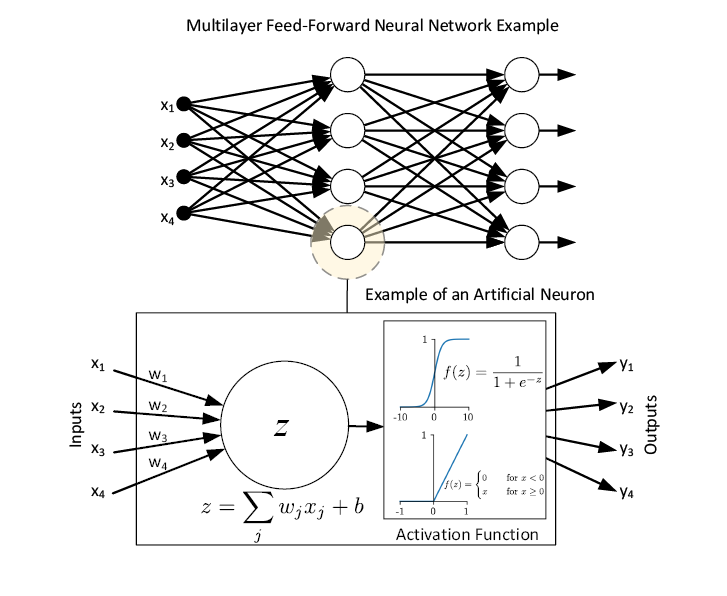

The computational model for an artificial neural network was proposed in 1943 by McCulloch and Pitts [18], when trying to understand how cats and monkeys process information from the eyes. Computational limitations greatly hampered widespread use and favored simpler machine learning methods such as SVMs. The increase in computational power and the need for more sophisticated machine learning solutions that are less sensitive to noise have resulted in a resurgence of interest in artificial neural networks.

The promise of Artificial Neural Networks (ANN) is to move beyond the von Neumann computer architecture [19]. The von Neumann approach has resulted in computers that can outperform people in the numeric domain. However, there is a need for algorithms that can learn and adapt in order to solve new problems such as sentiment analysis and emotion detection.

Figure 1. Basic structure of a neuron [21] – Source: Author

The axon branches in order to connect to multiple other neurons. The connection from the axon to the dendrite of another neuron is called a synapse. Signals travel through a biological neural network much more slowly than electrical signals; comparing that speed with the time it takes to respond to a stimulus suggests that signal processing takes fewer than 100 stages [19].

Figure 2. Example of a multilayer FNN [19] – Source: Author

Figure 3. Simple recurrent neural network – Source: Author

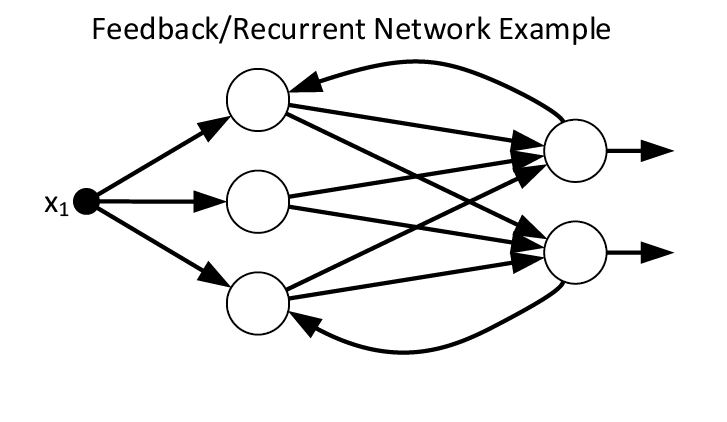

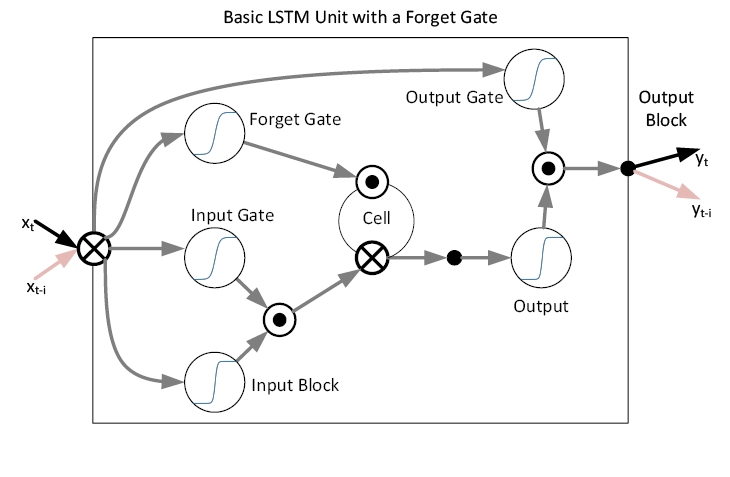
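A multilayer feed-forward network of the kind captioned in Figure 2 can be sketched as a chain of weighted sums, each followed by an activation function. The layer sizes, random weights, and sigmoid activation below are assumptions made for illustration only; in practice the weights would be learned from training data.

```python
import numpy as np


def sigmoid(x):
    """Logistic activation, squashing values into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-x))


def feed_forward(x, layers):
    """Propagate input x through a list of (weights, bias) layers."""
    activation = np.asarray(x, dtype=float)
    for weights, bias in layers:
        # Each unit computes a weighted sum of the previous layer's outputs.
        activation = sigmoid(weights @ activation + bias)
    return activation


# Hypothetical sizes: 4 inputs, 5 hidden units, 3 outputs, random weights.
rng = np.random.default_rng(0)
layers = [
    (rng.normal(size=(5, 4)), np.zeros(5)),
    (rng.normal(size=(3, 5)), np.zeros(3)),
]
output = feed_forward([0.5, -1.0, 0.25, 2.0], layers)
```

Information flows in one direction only, from input to output, which is why such a network does not change its behavior after training unless it is retrained.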

The feed-forward ANN, once trained, can be deployed and will not adapt or continue learning. The second major type of ANN is the Recurrent or Feedback Neural Network (R/FNN), illustrated in Figure 3, which shows a basic ANN with feedback. These networks continue to learn and adapt to changes; the complication is that training is slow and can stall if the gradient goes to zero [22]. To address this, the long short-term memory (LSTM) unit was proposed. An LSTM unit maintains a constant error flow and has the ability to forget and reset its state [23, 24, 25]. LSTM units can continue to learn over 1,000 time steps. Figure 4 is an illustration of an LSTM unit. The unit takes weighted inputs summed with weighted recurrent values from the previous time step and sends them to an input activation function and to the activation functions that make up the input, output, and forget gates. The activation functions are typically sigmoid or tanh. At the center is the cell, whose self-connection has a constant weight of one; the stored state is multiplied by the output of the forget gate. The result of this multiplication is summed with the product of the activated input and the input gate. This sum passes through an output activation function, and the result is multiplied by the output gate.
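The gate arithmetic described above can be written out as a single time step. This is a minimal sketch of a commonly used LSTM formulation without peephole connections, not necessarily the exact variant in the cited works; the parameter layout, names, and random initialization are assumptions for illustration.

```python
import numpy as np


def sigmoid(x):
    """Logistic activation used for the three gates."""
    return 1.0 / (1.0 + np.exp(-x))


def lstm_step(x, h_prev, c_prev, params):
    """One LSTM time step; params maps a gate name to (W, U, b)."""
    def gate(name, activation):
        W, U, b = params[name]
        # Weighted current input plus weighted previous output.
        return activation(W @ x + U @ h_prev + b)

    i = gate("input", sigmoid)       # how much new input to admit
    f = gate("forget", sigmoid)      # how much old cell state to keep
    o = gate("output", sigmoid)      # how much of the cell to expose
    g = gate("candidate", np.tanh)   # activated input

    c = f * c_prev + i * g           # cell state: forget product + input product
    h = o * np.tanh(c)               # output activation scaled by output gate
    return h, c


def init_params(n_in, n_hidden, seed=0):
    """Small random weights and zero biases for all four components."""
    rng = np.random.default_rng(seed)
    return {
        name: (
            rng.normal(scale=0.1, size=(n_hidden, n_in)),
            rng.normal(scale=0.1, size=(n_hidden, n_hidden)),
            np.zeros(n_hidden),
        )
        for name in ("input", "forget", "output", "candidate")
    }
```

When the forget gate is fully open and the input gate is closed, the cell state is carried forward unchanged, which is what allows information and error signals to persist over many time steps.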

Figure 4. Illustration of an LSTM unit used in RNN networks [24, 25] – Source: Author

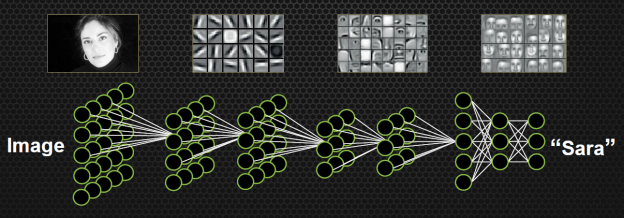

Figure 5. Example of a convolutional neural network (CNN) for facial recognition [29, 48] – Source: Author

CNNs and Emotion Detection

Convolutional neural networks (CNN), a type of FNN, were inspired by studies of the visual cortex of cats and monkeys, which contains locally sensitive, orientation-selective neurons [26, 27, 28]. This type of structure has proven to work well for visual analysis. CNNs are trained feature filters, which work well at identifying features that are related spatially. Figure 5 illustrates this, starting with an image on the left and moving to the right through the filters of the convolutional neural network [29]. The first filter is shown as the image directly to the right of the original image in Figure 5, highlighting very basic edge and line detection. From there, additional filters are applied, each one adding a convolutional layer that is more abstract than the previous one (shapes, contours, objects). By the last layer, parts of a face can be identified, such as the eyes and mouth. The last layer in this CNN example consists of portions of the faces used to train the filters. Neural networks require large quantities of training data to ensure that generic features are identified and that overfitting does not occur.
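The edge and line detection performed by a first-layer filter can be sketched with a single hand-written kernel. In a trained CNN the filter values are learned rather than fixed; the Sobel kernel, the synthetic image, and the function name below are assumptions used only to show the mechanics.

```python
import numpy as np


def convolve2d_valid(image, kernel):
    """Slide a kernel over an image (no padding, stride 1).

    Like most CNN libraries, this computes cross-correlation: the kernel
    is not flipped before it is applied.
    """
    image = np.asarray(image, dtype=float)
    kernel = np.asarray(kernel, dtype=float)
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for r in range(out_h):
        for c in range(out_w):
            # Each output value is the filter's response at one location.
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out


# Hand-written vertical-edge filter (Sobel); a CNN would learn such filters.
SOBEL_X = np.array([[-1, 0, 1],
                    [-2, 0, 2],
                    [-1, 0, 1]])

# Synthetic 5x6 image: dark on the left, bright on the right.
image = np.zeros((5, 6))
image[:, 3:] = 1.0

# ReLU keeps only positive responses, as is common after a convolution.
feature_map = np.maximum(convolve2d_valid(image, SOBEL_X), 0.0)
```

The resulting feature map is zero over the uniform regions and responds only where the window straddles the dark-to-bright boundary, which is the spatially local behavior the paragraph above describes.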

Modern emotion detection methods utilize CNNs for their utility in image identification [30, 31, 32, 33]. The CNN is used to classify an observed emotion in static images and relate it to the previously mentioned Action Units. As mentioned before, neural networks require large amounts of data and considerable computational power for training. However, once trained, neural network classification is very efficient and typically exhibits higher accuracy than other existing machine learning methods.

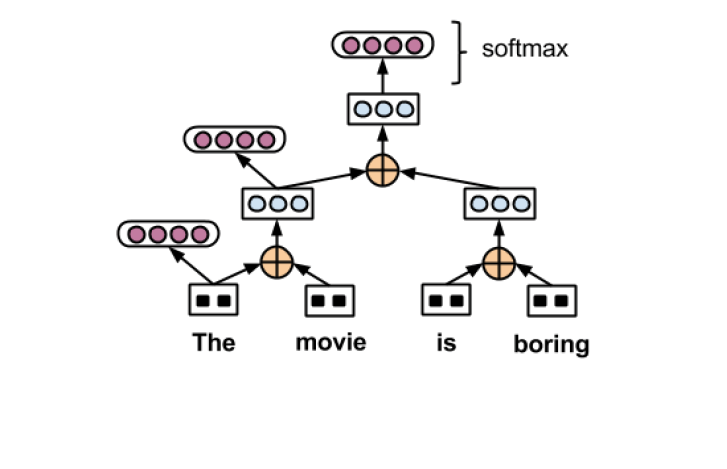

RNNs and Sentiment Analysis

Figure 6. An LSTM-RNN network constructed for sentiment analysis [34]. The yellow filled cross-hairs are the LSTM units; the softmax layer calculates the sentiment probability – Source: Author
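The softmax layer mentioned in the Figure 6 caption turns the network's final scores into a probability for each sentiment class. The sketch below assumes the recurrent layers have already produced a final hidden-state vector; the label set, weight shapes, and function names are hypothetical.

```python
import numpy as np

# Hypothetical label set; the classes depend on the training data used.
LABELS = ["negative", "neutral", "positive"]


def softmax(logits):
    """Convert raw scores into probabilities that sum to one."""
    logits = np.asarray(logits, dtype=float)
    shifted = logits - np.max(logits)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def sentiment_probabilities(final_hidden_state, weights, bias):
    """Map a final hidden state to one probability per sentiment label."""
    logits = weights @ np.asarray(final_hidden_state, dtype=float) + bias
    return dict(zip(LABELS, softmax(logits)))
```

The predicted sentiment is simply the label with the highest probability, and the probabilities themselves give a rough indication of how confident the network is.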

Looking Ahead

RNNs and CNNs are currently the most common neural networks, but there are others, and researchers are continuing to build deeper networks. Combining the benefits of a learned network found in CNNs with the ability to adapt and learn over time has resulted in ANNs that combine both architectures [36, 37, 38]. This is typically done by starting with a trained CNN and connecting it to an RNN, providing both spatial and temporal reasoning.
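The spatial-then-temporal pipeline described above can be sketched as a per-frame feature extractor feeding a recurrent update. Everything here is an assumption for illustration: `extract_features` is a trivial stand-in for a trained CNN, the recurrence is a simple Elman-style update rather than an LSTM, and the weights are random rather than learned.

```python
import numpy as np


def extract_features(frame):
    """Placeholder for a trained CNN: reduce a 2-D frame to a feature vector."""
    return np.asarray(frame, dtype=float).mean(axis=0)


def run_recurrent(frame_features, W_in, W_rec, b):
    """Simple recurrent update over a sequence of per-frame feature vectors."""
    h = np.zeros(W_rec.shape[0])
    for features in frame_features:
        # The new state depends on the current frame and the previous state.
        h = np.tanh(W_in @ features + W_rec @ h + b)
    return h


# Hypothetical video clip: three 4x6 frames of random pixel values.
rng = np.random.default_rng(0)
frames = [rng.random((4, 6)) for _ in range(3)]
W_in = rng.normal(size=(5, 6))
W_rec = rng.normal(size=(5, 5))
b = np.zeros(5)

summary = run_recurrent([extract_features(f) for f in frames], W_in, W_rec, b)
```

The final state `summary` depends on the order of the frames as well as their content, which is the temporal reasoning that a CNN applied to single images cannot provide on its own.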

Generative Adversarial Networks (GANs)¹, which combine multiple NNs in a very different way to provide surprisingly effective optimizations, are showing benefit in several areas.

In terms of human-AI teaming and applications to the cyber domain, there is even work moving ahead on helping humans understand the AI’s “point of view” to better solve problems and meet complex goals.²

Conclusion

With the development of more advanced ANNs, and as machines become integrated into the process, knowledge management will become a diverse mix of people, tools, and data. Improving human-machine interactions through emotional intelligence is crucial in developing trust between people and machines [39]. Advances in neural network algorithms and improved computational capability are enabling better emotion and sentiment detection systems. These, in turn, improve the human-machine interface as well as the machine’s ability to manage knowledge and interpret human interactions more effectively.

Companies from a variety of industries have been developing their own emotion detection systems or buying up other companies with experience in emotion detection [40, 41, 42, 43]. Most, if not all, of these companies are utilizing neural networks to understand emotions and are developing automated sentiment analysis for text, as well as voice analysis, to improve human-machine interactions [44]. In the cyber domain, there is much work going on in the area of combined human-machine teams, which require emotion and sentiment understanding to stand up to the sometimes complex scenarios of cybersecurity.

Neural networks are still in their infancy, and it will be a while before they are able to think like a human, due to the limited complexity of the networks currently possible. Williams and Herrup [45] looked at the total number of neurons in the central nervous systems of different species. They found that small organisms, like metazoans, typically had fewer than 300 neurons, while the common octopus and small mammals, like mice, have between 30 and 100 million neurons. Larger mammals, like whales and elephants, have more than 200 billion neurons. Healthy adult humans of normal intelligence have an estimated 100 billion neurons. Estimates of the number of neural units used in current ANNs are in the millions for the most complex networks [46]. However, with the continued increase in computing power and the introduction of new computational designs like neuromorphic computing, closing the gap is just a matter of time [47, 21].

Endnotes

- I. Goodfellow et al., “Generative Adversarial Nets,” arXiv preprint arXiv:1406.2661v1 [stat.ML], 10 June 2014.

- A. Chandrasekaran et al., “It Takes Two to Tango: Towards Theory of AI’s Mind,” arXiv preprint arXiv:1704.00717v2 [cs.CV], 2 October 2017.

References

- B. Endicott-Popovsky, “The Probability of 1,” Journal of Cyber Security and Information Systems, vol. 3, pp. 18-19, 2015.

- M. Addleson, “A Knowledge Management (KM) Primer,” Journal of Cyber Security and Information Systems, vol. 2, pp. 2-12, 2014.

- K. R. Scherer, “Emotion as a multicomponent process: A model and some cross-cultural data,” Review of Personality & Social Psychology, 1984.

- C. Potts, “Sentiment Symposium Tutorial,” 2011 (accessed December 7, 2016).

- K. R. Scherer, “What are emotions? And how can they be measured?,” Social science information, vol. 44, pp. 695-729, 2005.

- P. Ekman, Telling lies: Clues to deceit in the marketplace, politics, and marriage (revised edition), WW Norton & Company, 2009.

- C. D. Manning and H. Schütze, Foundations of statistical natural language processing, vol. 999, MIT Press, 1999.

- X. Hu, J. Tang, H. Gao and H. Liu, “Unsupervised sentiment analysis with emotional signals,” in Proceedings of the 22nd international conference on World Wide Web, 2013.

- B. Pang, L. Lee and S. Vaithyanathan, “Thumbs up?: sentiment classification using machine learning techniques,” in Proceedings of the ACL-02 conference on Empirical methods in natural language processing-Volume 10, 2002.

- B. Pang and L. Lee, “A sentimental education: Sentiment analysis using subjectivity summarization based on minimum cuts,” in Proceedings of the 42nd annual meeting on Association for Computational Linguistics, 2004.

- T. Mullen and R. Malouf, “A Preliminary Investigation into Sentiment Analysis of Informal Political Discourse,” in AAAI Spring Symposium: Computational Approaches to Analyzing Weblogs, 2006.

- Z. Zeng, M. Pantic, G. I. Roisman and T. S. Huang, “A survey of affect recognition methods: Audio, visual, and spontaneous expressions,” IEEE transactions on pattern analysis and machine intelligence, vol. 31, pp. 39-58, 2009.

- R. Hartley and A. Zisserman, Multiple view geometry in computer vision, Cambridge university press, 2003.

- P. Ekman, W. V. Friesen and J. C. Hager, “Facial action coding system (FACS),” A technique for the measurement of facial action. Consulting, Palo Alto, vol. 22, 1978.

- G. Littlewort, J. Whitehill, T. Wu, I. Fasel, M. Frank, J. Movellan and M. Bartlett, “The computer expression recognition toolbox (CERT),” in Automatic Face & Gesture Recognition and Workshops (FG 2011), 2011 IEEE International Conference on, 2011.

- Y. Song, L.-P. Morency and R. Davis, “Learning a sparse codebook of facial and body microexpressions for emotion recognition,” in Proceedings of the 15th ACM on International conference on multimodal interaction, 2013.

- X. Li, X. Hong, A. Moilanen, X. Huang, T. Pfister, G. Zhao and M. Pietikäinen, “Reading hidden emotions: spontaneous micro-expression spotting and recognition,” arXiv preprint arXiv:1511.00423, 2015.

- W. S. McCulloch and W. Pitts, “A logical calculus of the ideas immanent in nervous activity,” The bulletin of mathematical biophysics, vol. 5, pp. 115-133, 1943.

- A. K. Jain, J. Mao and K. M. Mohiuddin, “Artificial neural networks: A tutorial,” Computer, vol. 29, pp. 31-44, 1996.

- N. Baumann and D. Pham-Dinh, “Biology of oligodendrocyte and myelin in the mammalian central nervous system,” Physiological reviews, vol. 81, pp. 871-927, 2001.

- T. Pfeil, “Exploring the potential of brain-inspired computing,” 2015.

- Z. C. Lipton, J. Berkowitz and C. Elkan, “A critical review of recurrent neural networks for sequence learning,” arXiv preprint arXiv:1506.00019, 2015.

- F. A. Gers and E. Schmidhuber, “LSTM recurrent networks learn simple context-free and context-sensitive languages,” IEEE Transactions on Neural Networks, vol. 12, pp. 1333-1340, 2001.

- F. A. Gers, J. Schmidhuber and F. Cummins, “Learning to forget: Continual prediction with LSTM,” Neural computation, vol. 12, pp. 2451-2471, 2000.

- K. Greff, R. K. Srivastava, J. Koutník, B. R. Steunebrink and J. Schmidhuber, “LSTM: A search space odyssey,” IEEE transactions on neural networks and learning systems, 2016.

- Y. LeCun and Y. Bengio, “Convolutional networks for images, speech, and time series,” The handbook of brain theory and neural networks, vol. 3361, p. 1995, 1995.

- M. Matsugu, K. Mori, Y. Mitari and Y. Kaneda, “Subject independent facial expression recognition with robust face detection using a convolutional neural network,” Neural Networks, vol. 16, pp. 555-559, 2003.

- D. H. Hubel and T. N. Wiesel, “Receptive fields and functional architecture of monkey striate cortex,” The Journal of physiology, vol. 195, pp. 215-243, 1968.

- H. Lee, R. Grosse, R. Ranganath and A. Y. Ng, “Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations,” in Proceedings of the 26th annual international conference on machine learning, 2009.

- S. Albanie and A. Vedaldi, “Learning Grimaces by Watching TV,” arXiv preprint arXiv:1610.02255, 2016.

- P. O. Glauner, “Deep convolutional neural networks for smile recognition,” arXiv preprint arXiv:1508.06535, 2015.

- S. Zafeiriou, A. Papaioannou, I. Kotsia, M. Nicolaou and G. Zhao, “Facial Affect “in-the-wild”: A survey and a new database,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, 2016.

- S. Zweig and L. Wolf, “InterpoNet, A brain inspired neural network for optical flow dense interpolation,” arXiv preprint arXiv:1611.09803, 2016.

- P. Le and W. Zuidema, “Compositional distributional semantics with long short term memory,” arXiv preprint arXiv:1503.02510, 2015.

- Y. Bengio, N. Boulanger-Lewandowski and R. Pascanu, “Advances in optimizing recurrent networks,” in 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, 2013.

- P. Khorrami, T. Le Paine, K. Brady, C. Dagli and T. S. Huang, “How deep neural networks can improve emotion recognition on video data,” in Image Processing (ICIP), 2016 IEEE International Conference, 2016.

- L. Deng and J. Platt, “Ensemble deep learning for speech recognition,” 2014.

- T. N. Sainath, O. Vinyals, A. Senior and H. Sak, “Convolutional, long short-term memory, fully connected deep neural networks,” in Acoustics, Speech and Signal Processing (ICASSP), 2015 IEEE International Conference, 2015.

- T. Heffernan, G. O’Neill, T. Travaglione and M. Droulers, “Relationship marketing: The impact of emotional intelligence and trust on bank performance,” International Journal of Bank Marketing, vol. 26, pp. 183-199, 2008.

- Google Cloud Vision API.

- IANS, Facebook acquires emotion detection startup FacioMetrics, 2016 (accessed January 17, 2017).

- Microsoft Cognitive Services Emotion API.

- C. Metz, “Apple Buys AI Startup That Reads Emotions in Faces,” 2016 (accessed January 17, 2017).

- B. Doerrfeld, 20+ Emotion Recognition APIs That Will Leave You Impressed, and Concerned, 2015 (accessed January 17, 2017).

- R. W. Williams and K. Herrup, “The control of neuron number,” Annual review of neuroscience, vol. 11, pp. 423-453, 1988 (accessed February 27, 2017).

- Wikipedia, Artificial neural network — Wikipedia, The Free Encyclopedia, 2017.

- D. S. Modha, “Introducing a Brain-inspired Computer,” accessed January 19, 2017.

- L. Brown, “Accelerate Machine Learning with the cuDNN Deep Neural Network Library,” NVIDIA Accelerated Computing, 7 9 2014. [Online]. Available: https://devblogs.nvidia.com/parallelforall/accelerate-machine-learning-cudnn-deep-neural-network-library/. [Accessed 7 3 2017].