Modern defense platforms are at increasing risk of cyber-attack from sophisticated adversaries. These platforms do not currently provide the situational awareness necessary to identify when they are under cyber-attack, nor to detect that a constituent subsystem may be in a compromised state. Long-term improvements can be made to the security posture of these platforms by incorporating modern secure design best practices, but this is a time-consuming and costly task. Monitoring platform communication networks for malicious activity is an attractive solution for achieving improved cyber security on defense platforms in the near term. This article presents our research into the susceptibility of modern defense platforms to cyber-attack, and the suitability of platform-based intrusion detection systems in addressing this threat. We discuss risk factors contributing to cyber access, then describe a range of platform cyber-attack classes while considering the observables and indicators present on the embedded platform networks. Finally, we examine factors and considerations relating to implementation of a “Cyber Warning Receiver” solution approach for detection of such attacks.

The Threat is Real

For as long as weapons system platforms have been called upon to perform missions in contested spaces, the military has sought to protect the warfighter by equipping these platforms with survivability equipment. This equipment detects threats from across the various domains in which the platform operates, alerts operators, and takes appropriate response measures. As the technology and connectivity of these platforms evolve and automation brings increasing sophistication, a new threat domain has emerged. This threat lurks in the dark, escaping detection by human eyes and ears, yet it has a clear potential for harm to the warfighter and to the mission. This is the cyber threat, and it is real.

Cyber-attacks become a credible threat if there is a reasonable expectation that a malicious actor could gain access to a defense platform, achieve a persistent malware presence, and subsequently trigger this malware to impart a damaging effect. In cyberspace, there are no concrete boundaries or borders. Cyber-attacks are not typically encumbered by range or timing. A malicious actor in a faraway land could achieve a latent presence and leverage it at a critical moment in the future to achieve their end goals. They could affect a single platform or an entire compromised squadron simultaneously.

Lessons from Industry

While there is a lack of openly documented cyber-attacks against Department of Defense (DoD) platforms, published examples against similar systems in other industries provide a compelling case for the feasibility of such attacks. We hear more and more about attacks against embedded systems and other smart devices, carried out by actors ranging from individual troublemakers to state-sponsored hacking groups. Examples include foul-mouthed hackers yelling at children via smart baby monitors [1], smart TVs used as entry points to home networks [2], entire automobiles taken over remotely [3], and debilitating modification of industrial control processes [4].

In 2015, security researchers Dr. Charlie Miller and Chris Valasek remotely accessed an unaltered SUV, controlling everything from the radio volume to the transmission and steering systems [3]. They first gained access through a USB maintenance port, and later through the vehicle's onboard cellular network. By traversing multiple subsystems, they ultimately controlled physical functions of the SUV from their hotel room while the vehicle was traveling on a highway.

In 2017, as reported by Ars Technica [2], a security researcher demonstrated the insertion of malicious code into a broadcast TV signal. The transmitted code exploited a vulnerability in the smart TV's web browser, giving the attacker root access. If a broadcast station were compromised, this attack could be delivered to any vulnerable TV within range of its broadcast towers.

As systems grow more complex and incorporate more components, their supply chains become more dispersed and global. This makes it difficult to validate the pedigree of every component on any one system. The 2017 Defense Science Board Task Force on Cyber Supply Chain confirmed the supply chain to be a real risk to DoD assets.

The examples above represent three distinct attack access vectors against embedded systems: supply chain compromise (e.g., a compromised microprocessor), maintenance pathways (the vehicle's USB port), and compromised data links (malware broadcast in a TV signal). Current trends in weapons system platform modernization suggest that these same vectors also apply to defense platforms.

Parallel Security Approaches

The trends of increasing computer automation and platform interconnectivity are here to stay, as they enable distinct tactical advantages. Platform security must improve to address the associated risks head on.

Two complementary approaches are common in traditional IT security, and both apply to defense platforms as well. The first is host-based security, in which the security of the individual boxes on a network is improved to increase the security of the system overall. On defense platforms, the diversity of subsystems means there is usually no single silver-bullet solution for host-based protection. Although host-based protection remains important, this diversity makes applying it to legacy platforms time-consuming and costly. The second approach is network-based security, in which communications between hosts on a network are monitored to detect and potentially intercept malicious activity. We explore this second approach as a path to near-term improvement in platform security.

Network Lockdown

Embedded networks form the backbone for communications between platform subsystems. They provide the critical link between interface equipment, such as displays and keypads, and the endpoint devices that actually implement mission-essential control or measurement capabilities. In most cases, both the actions necessary to conduct a cyber-attack and the resulting effects will be observable via these networks.

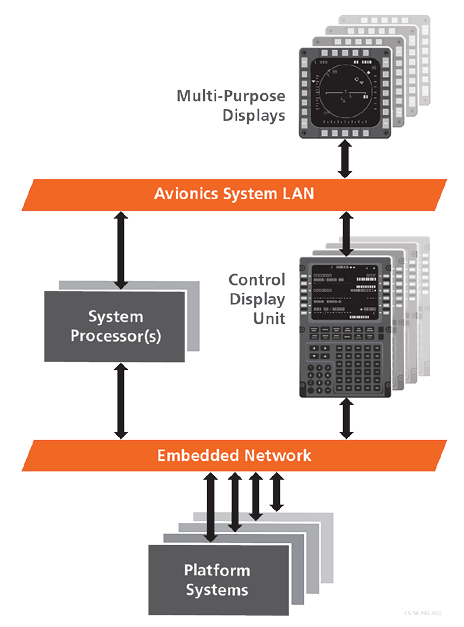

A common set of networks covers the vast majority of communications on today's defense platforms. In particular, the U.S. Army's Common Avionics Architecture System (CAAS), depicted in Figure 1 [5], relies heavily on Ethernet and MIL-STD-1553 (or its fiber-optic counterpart, MIL-STD-1773) networks. Other common embedded interfaces include CAN bus, ARINC 429, RS-232, RS-422, and analog and discrete signals. A Cyber Warning Receiver designed to look specifically for malicious activity on these networks can provide the broadly applicable solution needed for a near-term, game-changing enhancement of platform security. Because it focuses on these common networks rather than on individual hosts, it can be adapted rapidly to different platforms, which would greatly benefit the cyber security posture of the overall fleet.

Figure 1.

Cyber Attacks and Embedded Networks

The body of published work on platform embedded network security is small compared with research on similar consumer, commercial, and industrial networks, which are more openly accessible to security researchers for characterization. Our ongoing research has shown that many of the attack types conceived for those other network types also apply to platform embedded networks. An overarching theme is that platform embedded networks provide no security features, such as authentication or encryption, that would mitigate such misuse.

The attack types available depend on the specific foothold an attacker has achieved on a platform. In general, an attacker might hold one of several positions with respect to a given network:

- Attacker presence on systems outside the network that leverage data sent or received via the network;

- Presence on a Remote Terminal / Slave / Receiving device connected to the network;

- Presence on a Bus Controller / Master / Transmitting device for the network; and

- Multiple points of presence creating a combination of these states.

Given this set of states, some of the attack types we’ve described and characterized are:

- Methods by which a compromised host could initiate new messages, remove existing messages, or intercept and modify data in transit between other terminals.

- Methods by which a compromised host could impersonate a different terminal or attempt to escalate its role in controlling the network.

- Methods by which any compromised host on the network could deny messaging between other terminals.

- Attacks in which basic rules and conventions of the data exchange protocol or application layer protocols in use are violated.

- Attacks in which a compromised host deliberately sends incorrect data to another host as part of the normal data exchange. This could include sensor data, control commands, system status or other information.

Consideration of possible attack types and characterization of their effects helps inform a robust design for a platform security detection system like a Cyber Warning Receiver.

Attack Observables

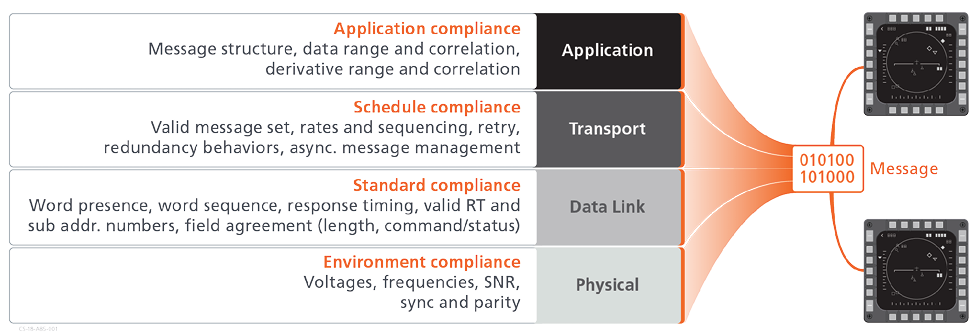

As the attacks described above take place on an embedded network, they produce side effects that are observable to a high-fidelity monitor. Embedded networks can be logically organized into several network layers. It is convenient to apply these layers when considering the various observables present. Although observables may vary by specific network type, Figure 2 provides a general summary of some common examples.

Figure 2 – Common Embedded Network Layers and Observables

The bottom layer is the physical layer, which contains observables relating to the fundamental electrical environment necessary for proper operation of the network. Certain attacks can cause disturbances at this level, especially in cases where misuse of the network causes message collisions.

The data link layer handles message addressing. At this level we can verify that only valid addresses and subaddresses are present, and that the expected message structure is intact, including allowed message types and expected word sequences for the hosts involved.
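As an illustration of data-link-layer checking on a MIL-STD-1553 bus, the minimal Python sketch below splits a 16-bit command word into its remote terminal (RT) address, transmit/receive bit, subaddress, and word count or mode code fields, then compares the result against a whitelist. It assumes the monitor hardware already delivers decoded 16-bit words (sync and parity handled elsewhere); the profile tables `ALLOWED_MESSAGES` and `ALLOWED_MODE_CODES` are hypothetical stand-ins for data that would come from a platform's interface control documents.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CommandWord:
    rt_address: int  # bits 15-11: remote terminal address (31 = broadcast)
    transmit: bool   # bit 10: True when the RT is commanded to transmit
    subaddress: int  # bits 9-5: subaddress; 0 or 31 marks a mode command
    count: int       # bits 4-0: data word count (0 encodes 32) or mode code


def decode_command_word(word: int) -> CommandWord:
    """Split the 16 information bits of a MIL-STD-1553 command word."""
    if not 0 <= word <= 0xFFFF:
        raise ValueError("command word must fit in 16 bits")
    return CommandWord(
        rt_address=(word >> 11) & 0x1F,
        transmit=bool((word >> 10) & 0x1),
        subaddress=(word >> 5) & 0x1F,
        count=word & 0x1F,
    )


# Hypothetical platform profile: (RT address, subaddress, transmit?) -> expected
# data word count. A real profile would come from interface control documents.
ALLOWED_MESSAGES = {
    (5, 1, False): 4,
    (5, 2, True): 8,
    (12, 3, True): 2,
}
# Hypothetical (RT address, mode code) pairs the bus controller may legitimately issue.
ALLOWED_MODE_CODES = {(5, 2)}


def check_data_link(word: int) -> list:
    """Return data-link-layer findings (an empty list if the command word looks legal)."""
    cmd = decode_command_word(word)
    if cmd.subaddress in (0, 31):  # mode command: the count field holds a mode code
        if (cmd.rt_address, cmd.count) not in ALLOWED_MODE_CODES:
            return [f"unexpected mode code {cmd.count} to RT {cmd.rt_address}"]
        return []
    expected = ALLOWED_MESSAGES.get((cmd.rt_address, cmd.subaddress, cmd.transmit))
    if expected is None:
        direction = "T" if cmd.transmit else "R"
        return [f"unexpected message RT {cmd.rt_address} SA {cmd.subaddress} {direction}"]
    words = 32 if cmd.count == 0 else cmd.count
    if words != expected:
        return [f"word count {words}, expected {expected}"]
    return []


# 0x2824 = RT 5, receive, SA 1, 4 data words (present in the profile);
# 0x3824 is the same command addressed to RT 7, which the profile does not know.
print(check_data_link(0x2824))  # []
print(check_data_link(0x3824))  # ['unexpected message RT 7 SA 1 R']
```

A fielded profile would likely be keyed by operating mode as well, since the set of legal messages can change from one mode to another.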

The transport layer handles details such as message delivery rates, schedules, and stateful transactions. At this layer we can verify that the system is using the set of messages expected to occur as part of the schedule, with the appropriate sequence and timing.
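A transport-layer timing check can be sketched in the same spirit: each scheduled message should recur at its nominal period, and a message that stops arriving may indicate deletion or denial. The schedule table, periods, and jitter tolerances below are hypothetical values chosen for illustration, and message keys are simply assumed to be (RT address, subaddress) pairs.

```python
# Hypothetical schedule: (RT address, subaddress) -> (nominal period ms, allowed jitter ms)
SCHEDULE = {
    (5, 1): (20.0, 1.0),    # 50 Hz message
    (12, 3): (100.0, 2.0),  # 10 Hz message
}


class ScheduleMonitor:
    """Checks that each scheduled message recurs at its nominal period."""

    def __init__(self, schedule):
        self.schedule = schedule
        self.last_seen = {}  # message key -> timestamp (ms) of its latest occurrence

    def observe(self, key, t_ms):
        """Record one message occurrence and return any timing findings."""
        if key not in self.schedule:
            return [f"{key} is not part of the schedule"]
        period, jitter = self.schedule[key]
        findings = []
        prev = self.last_seen.get(key)
        if prev is not None:
            interval = t_ms - prev
            if abs(interval - period) > jitter:
                findings.append(
                    f"{key} interval {interval:.1f} ms, expected {period:.1f} +/- {jitter:.1f}"
                )
        self.last_seen[key] = t_ms
        return findings

    def overdue(self, now_ms, missed_periods=2):
        """List scheduled messages silent for more than `missed_periods` periods.
        Messages never seen at all are ignored here (e.g., during start-up)."""
        return [
            key
            for key, (period, _) in self.schedule.items()
            if key in self.last_seen and now_ms - self.last_seen[key] > missed_periods * period
        ]
```

In use, `observe` would be called for every message the monitor decodes, and `overdue` at a regular tick; an interval that is too short suggests an injected message, while an overdue message suggests deletion or denial.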

The application layer contains the core data of the message. Its format is often specific to the individual systems and their implementations, and typically varies by vendor. Where data fields are specified or can otherwise be identified, a set of normal behaviors can be observed based on their values. For example, data may be known to have a limited range of values, to exhibit a known distribution, or to have a limited rate at which it can change. In other cases, multiple data fields might exhibit correlations, such as always moving together or negating one another. Behavior outside these norms could indicate a cyber-attack.
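Once fields have been extracted, the application-layer norms described above (limited range, limited rate of change, and fields that move together) can be expressed as simple rules. This is a hand-written sketch rather than a learned model; the field names `gps_alt_ft` and `baro_alt_ft` and every limit in `LIMITS` and `PAIRS` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldLimits:
    lo: float        # smallest plausible value
    hi: float        # largest plausible value
    max_rate: float  # largest plausible change per second


# Hypothetical limits for two altitude fields reported by different subsystems.
LIMITS = {
    "gps_alt_ft": FieldLimits(lo=-1000.0, hi=20000.0, max_rate=100.0),
    "baro_alt_ft": FieldLimits(lo=-1000.0, hi=20000.0, max_rate=100.0),
}
# Hypothetical correlation rule: these two fields should agree within 200 ft.
PAIRS = [("gps_alt_ft", "baro_alt_ft", 200.0)]


def check_sample(prev, curr, dt_s, limits=LIMITS, pairs=PAIRS):
    """Apply range, rate-of-change, and pairwise-agreement rules to one sample.

    prev and curr map field names to values; prev may be None for the first sample.
    """
    findings = []
    for name, value in curr.items():
        lim = limits.get(name)
        if lim is None:
            continue
        if not lim.lo <= value <= lim.hi:
            findings.append(f"{name}={value} outside [{lim.lo}, {lim.hi}]")
        if prev is not None and name in prev and dt_s > 0:
            rate = abs(value - prev[name]) / dt_s
            if rate > lim.max_rate:
                findings.append(f"{name} changing at {rate:.0f}/s (limit {lim.max_rate:.0f}/s)")
    for a, b, max_gap in pairs:
        if a in curr and b in curr and abs(curr[a] - curr[b]) > max_gap:
            findings.append(f"{a} and {b} disagree by {abs(curr[a] - curr[b]):.0f}")
    return findings
```

A spoofed GPS altitude that jumps 600 ft in 0.1 s would trip both the rate rule and the agreement rule, illustrating how a single attack can leave more than one observable.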

Detecting Anomalies

A Cyber Warning Receiver operates by monitoring traffic and discovering anomalies in these observations and measurements. The normal behavior for each measurement must be characterized before deployment, based on the protocol specifications and platform-tailored information. Examples of such tailored information include the host addresses in use, the message schedule in each operating mode, and observations from collections of real-world data.
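Part of this characterization can be derived directly from recorded bus data. The minimal sketch below learns, for each operating mode, which messages appear and their typical repetition period. The trace format `(timestamp_ms, mode, message_key)` is an assumption, and the median is used so that occasional long gaps do not dominate the estimate.

```python
from collections import defaultdict
from statistics import median


def learn_profile(trace):
    """Learn which messages occur in each operating mode and their typical period.

    trace: iterable of (timestamp_ms, mode, message_key) tuples from recorded bus data.
    Returns {mode: {message_key: median period in ms, or None if seen only once}}.
    """
    times = defaultdict(lambda: defaultdict(list))
    for t_ms, mode, key in trace:
        times[mode][key].append(t_ms)
    profile = {}
    for mode, per_key in times.items():
        profile[mode] = {}
        for key, stamps in per_key.items():
            stamps.sort()
            gaps = [b - a for a, b in zip(stamps, stamps[1:])]
            # The median keeps a few long gaps (e.g., leaving and re-entering a mode)
            # from distorting the period estimate.
            profile[mode][key] = median(gaps) if gaps else None
    return profile


def unexpected_in_mode(profile, mode, key):
    """True if `key` was never observed in `mode` during characterization."""
    return key not in profile.get(mode, {})
```

The resulting per-mode table could seed both the address whitelist and the timing schedule used at the data link and transport layers.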

To detect attacks that have not previously been observed in the wild or anticipated by defenders, we must leverage observable side effects that are agnostic to specific attack implementation details. A subtle attack may impact only a small subset of the available observables, within only one of the network layers, while more aggressive attacks may have broader impacts. A robust solution must monitor across all observables and layers.

Anomaly detection at the application layer presents a particular challenge. For example, detecting malicious adjustment of a reported sensor value requires extracting that value, tracking it over time, and comparing it to a normalcy model. Given the variable formats of the application layer, detection of this important attack class requires sophisticated anomaly detectors that:

- Scale to address the sheer volume of data relationships that would exist for all systems and messages across a complete defense platform.

- Manage the specifics of the application layer message formats and field locations for dozens of devices and hundreds of unique messages.

- Discover subtle or secondary correlations that might escape the intuitions of human cyber defense experts and therefore remain open to exploitation by malicious parties.
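To make the extract-track-compare step concrete, the sketch below pulls scaled values out of raw 16-bit data words using a hypothetical field map (the per-message knowledge the second bullet refers to), then tracks each value with Welford's online mean and variance and flags large z-scores. The field map, scale factors, and thresholds are illustrative assumptions, and a single running mean and variance is a deliberately simple normalcy model that would miss most of the subtle relationships described above.

```python
import math

# Hypothetical field map: (RT, subaddress) -> field -> (data word index, scale per LSB, signed?)
FIELD_MAP = {
    (5, 1): {
        "pitch_deg": (0, 180.0 / 32768, True),
        "fuel_lb": (1, 1.0, False),
    },
}


def extract_fields(rt, sa, data_words):
    """Convert raw 16-bit data words into engineering values using FIELD_MAP."""
    values = {}
    for name, (index, scale, signed) in FIELD_MAP.get((rt, sa), {}).items():
        raw = data_words[index] & 0xFFFF
        if signed and raw & 0x8000:
            raw -= 0x10000  # interpret as two's complement
        values[name] = raw * scale
    return values


class RunningNormal:
    """Welford's online mean/variance, used as a very simple normalcy model."""

    def __init__(self, z_limit=4.0, warmup=30):
        self.n, self.mean, self.m2 = 0, 0.0, 0.0
        self.z_limit = z_limit  # how many standard deviations count as anomalous
        self.warmup = warmup    # samples required before any judgment is made

    def update(self, x):
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def is_anomalous(self, x):
        if self.n < self.warmup:
            return False
        std = math.sqrt(self.m2 / (self.n - 1))
        return std > 0 and abs(x - self.mean) / std > self.z_limit
```

In use, each value would be checked with `is_anomalous` before `update`, and values judged anomalous would typically be kept out of the model so that an attacker cannot slowly drag the learned mean toward a malicious value.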

These challenges suggest the use of more automated techniques for creating anomaly detectors.

Machine Learning as a Key Enabler

Advances in machine learning are well suited to the three challenges described above. Powerful parameter estimation and model structure detection techniques from machine learning are beneficial for system identification [6], and there are multiple examples of using observations to establish normal behavior models for complex systems [7]. Activity that these normal behavior models do not predict is anomalous and becomes a data point for cyber-attack investigation.
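As a small example of system identification used for anomaly detection, the sketch below fits a first-order autoregressive model, x[t] = a*x[t-1] + b, to normal data by least squares, and flags samples whose one-step prediction error exceeds k times the training residual standard deviation. The AR(1) model, the threshold `k`, and the `simulate` helper that generates synthetic data are assumptions for illustration; the learned models envisioned here would be far richer.

```python
import math
import random


def simulate(n, a=0.9, b=1.0, noise=0.1, seed=0):
    """Synthetic 'normal' signal x[t] = a*x[t-1] + b + noise, for illustration only."""
    rng = random.Random(seed)
    x = [b / (1 - a)]  # start at the steady-state level
    for _ in range(n - 1):
        x.append(a * x[-1] + b + rng.gauss(0.0, noise))
    return x


def fit_ar1(series):
    """Least-squares fit of x[t] = a*x[t-1] + b; returns (a, b, residual std)."""
    u, y = series[:-1], series[1:]
    n = len(u)
    mean_u, mean_y = sum(u) / n, sum(y) / n
    var_u = sum((ui - mean_u) ** 2 for ui in u)
    cov_uy = sum((ui - mean_u) * (yi - mean_y) for ui, yi in zip(u, y))
    a = cov_uy / var_u
    b = mean_y - a * mean_u
    residuals = [yi - (a * ui + b) for ui, yi in zip(u, y)]
    sigma = math.sqrt(sum(r * r for r in residuals) / (n - 2))  # 2 fitted parameters
    return a, b, sigma


def residual_alarms(series, model, k=5.0):
    """Indices t where x[t] deviates from the one-step prediction by more than k*sigma."""
    a, b, sigma = model
    return [
        t for t in range(1, len(series))
        if abs(series[t] - (a * series[t - 1] + b)) > k * sigma
    ]
```

For example, fitting on `simulate(500, seed=1)` and then adding 5.0 to sample 50 of `simulate(200, seed=2)` causes `residual_alarms` to flag t=50, and also t=51, because the prediction for the next sample is computed from the spoofed value.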

Modern machine learning approaches incorporate feature engineering and credit assignment as key elements. Deep learning techniques, for example, combine input observations (e.g., the values in each message data field) into more abstract aggregate features that, while no longer representing actual physical measurements, provide an excellent basis for deciding whether behavior is normal [8]. The learning process also determines which learned features contribute to such decisions and which are essentially irrelevant; that is, it assigns credit to the various features. These characteristics also greatly reduce the need to identify the most important data fields within the application layer by hand, a significant advantage over manually specifying data fields and their relative importance.
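A deep network is beyond a short example, but the idea of learning aggregate features and scoring new data by how well those features explain it can be sketched with principal component analysis, which spans the same subspace a linear autoencoder with k hidden units learns (Hinton and Salakhutdinov's 2006 paper in the references compares deep autoencoders against PCA). The sketch below substitutes PCA, computed by power iteration with deflation, for a deep model. Each row is one snapshot of several data fields, and a large reconstruction error marks a snapshot that breaks the learned relationships. The synthetic data and the threshold rule are assumptions.

```python
import math
import random


def synthetic_rows(n, seed=0):
    """Synthetic snapshots of three fields driven by one hidden quantity (illustration only)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        t = rng.uniform(-1.0, 1.0)
        rows.append([t + rng.gauss(0, 0.02), 2 * t + rng.gauss(0, 0.02), -t + rng.gauss(0, 0.02)])
    return rows


def fit_pca(rows, k=1, iters=200):
    """Learn the mean and top-k principal directions via power iteration with deflation."""
    n, d = len(rows), len(rows[0])
    mean = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - mean[j] for j in range(d)] for r in rows]
    cov = [[sum(x[i] * x[j] for x in centered) / (n - 1) for j in range(d)] for i in range(d)]
    components = []
    for _ in range(k):
        v = [1.0] * d
        for _ in range(iters):
            w = [sum(cov[i][j] * v[j] for j in range(d)) for i in range(d)]
            norm = math.sqrt(sum(x * x for x in w))
            v = [x / norm for x in w]
        eigenvalue = sum(v[i] * cov[i][j] * v[j] for i in range(d) for j in range(d))
        components.append(v)
        # Remove the found direction so the next pass finds the next one.
        cov = [[cov[i][j] - eigenvalue * v[i] * v[j] for j in range(d)] for i in range(d)]
    return mean, components


def reconstruction_error(row, model):
    """Encode the row onto the learned directions, decode it, and measure what is lost."""
    mean, components = model
    x = [r - m for r, m in zip(row, mean)]
    recon = [0.0] * len(x)
    for v in components:
        code = sum(xi * vi for xi, vi in zip(x, v))           # encoder
        recon = [ri + code * vi for ri, vi in zip(recon, v)]  # decoder
    return math.sqrt(sum((xi - ri) ** 2 for xi, ri in zip(x, recon)))
```

A snapshot such as `[0.5, 1.5, -0.5]`, where the second field no longer tracks the others, reconstructs poorly even though every individual value is within a normal range; no human had to specify which fields are related.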

Machine learning enables reasoning over much larger volumes of data than human experts could handle alone. Learned anomaly detectors can expose subtle interactions and mutual patterns of behavior among disparate elements on an embedded network. These patterns may seem innocuous to cyber defense experts trying to envision attack vectors, yet such oversights are exactly what attackers inevitably exploit. Finding instances of such subtle relationships has enhanced situational awareness in other domains [9]. Interestingly, insight into such patterns may also prove useful for system evaluation and troubleshooting when non-attack anomalies surface.
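A very simple form of automated relationship discovery computes the Pearson correlation between every pair of recorded fields and keeps the strongly correlated or anti-correlated pairs, which correspond to fields that move together or negate one another. This substitutes pairwise linear correlation for the learned models discussed above and will miss nonlinear or multi-field relationships; the 0.9 cutoff is a hypothetical choice. The number of pairs grows quadratically with the number of fields, which is one reason the scaling challenge noted earlier matters.

```python
import math
from itertools import combinations


def pearson(xs, ys):
    """Pearson correlation coefficient; 0.0 if either field never varies."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def discover_relationships(columns, min_abs_r=0.9):
    """Return (field_a, field_b, r) for every strongly related pair of fields.

    columns maps each field name to values sampled at the same instants.
    r near +1: the fields move together; r near -1: they negate one another.
    """
    found = []
    for a, b in combinations(sorted(columns), 2):
        r = pearson(columns[a], columns[b])
        if abs(r) >= min_abs_r:
            found.append((a, b, round(r, 3)))
    return found
```

Pairs discovered this way during characterization could then be monitored at run time, in the same way as the hand-written agreement rule for the two altitude fields.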

Training for Continued Success

With machine learning comes a need for algorithm training, the process by which machine learning algorithms ingest relevant data, extract features, and build their representations of expected behavior. For a practical defense system, this training should not impose intensive requirements for data collection.

Suitable machine learning algorithms can be trained initially on bus data recorded during field trials and qualification testing, and can improve their performance as additional data is acquired. Once the system is deployed, bus recordings collected after each mission would support incremental updates to the training sets and learned behavior models. Distributing new models across platform instances at regular intervals lets every protected platform benefit continuously from the fleet's collective data. The result is a defense system that evolves as new threats emerge and adapts to defeat them.
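Fleet-wide learning is easiest when models can be updated incrementally and merged. The minimal sketch below shows this for the simplest possible per-field model (count, mean, and sum of squared deviations): each platform updates its summary from post-mission recordings, and summaries from several platforms combine exactly using the parallel variance formula of Chan et al. Whether a fielded system would exchange summaries, full recordings, or retrained models is an open design choice that this sketch does not settle.

```python
from dataclasses import dataclass
from functools import reduce


@dataclass
class FieldStats:
    """Mergeable summary of one data field: count, mean, and sum of squared deviations."""
    n: int = 0
    mean: float = 0.0
    m2: float = 0.0

    def add(self, x):
        """Incremental (Welford) update from one new post-mission sample."""
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def merge(self, other):
        """Exactly combine two summaries (Chan et al. parallel variance formula)."""
        if other.n == 0:
            return FieldStats(self.n, self.mean, self.m2)
        if self.n == 0:
            return FieldStats(other.n, other.mean, other.m2)
        n = self.n + other.n
        delta = other.mean - self.mean
        mean = self.mean + delta * other.n / n
        m2 = self.m2 + other.m2 + delta * delta * self.n * other.n / n
        return FieldStats(n, mean, m2)

    @property
    def variance(self):
        return self.m2 / (self.n - 1) if self.n > 1 else 0.0


def fleet_model(platform_summaries):
    """Fold per-platform summaries into one fleet-wide summary."""
    return reduce(FieldStats.merge, platform_summaries, FieldStats())
```

Merging summaries of [1, 2, 3] and [4, 5, 6, 7] gives the same count, mean (4.0), and sample variance (28/6) as processing all seven values on one platform, which is what makes this kind of model easy to distribute.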

Measuring Malice

Not every anomaly means the platform is under attack. Systems regularly enter and exit new states and scenarios, and experience abnormal conditions resulting from a range of incidental activities or failure modes. The key distinction between system glitches and cyber-attacks lies in the correlations between observations, and the story they tell.

Any single cyber-attack step would generate a set of measurable side effects and artifacts. Multiple steps in sequence begin to form a picture of the current attacker presence and their objectives in an attack.

A data fusion system is the key element required to put these pieces together. Data fusion formulates the best possible estimate of the underlying system state based on observations, then determines the likelihood that anomalies are caused by an underlying failure, engagement in a scenario or operating mode not previously characterized, or an actual cyber-attack.
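A minimal sketch of the fusion step, assuming a naive Bayes formulation: three hypotheses (an equipment failure, an uncharacterized operating mode, or a cyber-attack) are scored against the presence or absence of anomaly indicators from the different network layers. The indicator names, priors, and likelihoods are invented for illustration, and treating indicators as conditionally independent is a simplifying assumption; a real fusion engine would also reason over the time ordering of attack steps.

```python
import math

# Hypothetical prior beliefs about what explains an anomaly.
PRIOR = {"failure": 0.6, "new_mode": 0.35, "attack": 0.05}
# Hypothetical P(indicator observed | hypothesis); a real system would estimate these.
LIKELIHOOD = {
    "unknown_address":    {"failure": 0.01, "new_mode": 0.02, "attack": 0.5},
    "schedule_change":    {"failure": 0.3,  "new_mode": 0.7,  "attack": 0.6},
    "value_out_of_range": {"failure": 0.6,  "new_mode": 0.2,  "attack": 0.5},
    "electrical_fault":   {"failure": 0.5,  "new_mode": 0.01, "attack": 0.2},
}


def fuse(indicators, prior=PRIOR, likelihood=LIKELIHOOD):
    """Naive-Bayes posterior over hypotheses given which indicators were observed."""
    log_post = {h: math.log(p) for h, p in prior.items()}
    for name, table in likelihood.items():
        observed = name in indicators
        for h in log_post:
            p = table[h]
            log_post[h] += math.log(p if observed else 1.0 - p)
    top = max(log_post.values())  # subtract before exp() for numerical stability
    weights = {h: math.exp(v - top) for h, v in log_post.items()}
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}
```

With these made-up numbers, a schedule change alone points to an uncharacterized mode, an electrical fault with an out-of-range value points to a failure, and an unknown address combined with a schedule change points most strongly to an attack.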

Building a Complete Picture

A final consideration in defining a Cyber Warning Receiver capability is the question of appropriate response. Among the options are event logging, operator notification, and active defense. Each has its own benefits and drawbacks.

Event logging during anomalous periods provides the capability to perform post-mission forensic data analysis. This low-impact activity is essential for better threat insight and preparedness for future engagements, but it offers limited protection against attacks as they occur.
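Forensic logging usually needs context from before an anomaly as well as after it. One common way to provide this is a pre-trigger ring buffer, sketched below; the buffer sizes are hypothetical, and a message can be any record the monitor produces (a timestamped bus word, for example).

```python
from collections import deque


class AnomalyRecorder:
    """Pre-trigger ring buffer: each captured event holds up to `pre` messages
    before the triggering anomaly, the trigger itself, and `post` messages after."""

    def __init__(self, pre=1000, post=1000):
        self.history = deque(maxlen=pre)  # rolling window of recent traffic
        self.post = post
        self.current = None               # capture in progress, if any
        self.remaining = 0                # messages still to add to the capture
        self.events = []                  # completed captures for post-mission analysis

    def record(self, message, anomalous=False):
        if self.current is None and anomalous:
            # Start a capture seeded with the pre-trigger history.
            self.current = list(self.history)
            self.remaining = self.post + 1  # the trigger message plus `post` more
        if self.current is not None:
            # Further anomalies during a capture are simply recorded inside it.
            self.current.append(message)
            self.remaining -= 1
            if self.remaining == 0:
                self.events.append(self.current)
                self.current = None
        self.history.append(message)
```

Because only the windows around anomalies are kept, storage stays bounded even on long missions, while analysts still see the traffic that led up to each event.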

Operator notification could prompt a more immediate response, but it is not without risk. A notification should never distract a pilot or other key mission personnel unless the findings suggest an imminent survivability threat. Coordinated cyber and kinetic attacks in a combat situation would need to be prioritized to ensure a manageable feed of critical information to the operator. Providing too much information or generating excessive nuisance false alarms could lead an operator to disable the system, eliminating the protection and defeating its purpose.
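The notification policy described above can be sketched as a small gate between the fusion output and the crew interface: it interrupts the operator only when the estimated attack probability is high and a critical subsystem is involved, and it suppresses repeat alerts within a holdoff window. The threshold, holdoff, and subsystem names are hypothetical, and everything that does not become an alert would still go to the event log.

```python
from dataclasses import dataclass, field


@dataclass
class AlertGate:
    """Decides whether a fused cyber assessment should interrupt the operator."""
    attack_threshold: float = 0.9   # hypothetical posterior needed to alert
    holdoff_s: float = 60.0         # hypothetical minimum spacing of repeat alerts
    critical: frozenset = frozenset({"flight_controls", "weapons"})  # hypothetical
    last_alert: dict = field(default_factory=dict)  # subsystem -> time of last alert

    def decide(self, subsystem, p_attack, now_s):
        """Return 'alert', 'suppressed', or 'log' for one assessment."""
        if p_attack < self.attack_threshold or subsystem not in self.critical:
            return "log"            # recorded for forensics; operator not interrupted
        previous = self.last_alert.get(subsystem)
        if previous is not None and now_s - previous < self.holdoff_s:
            return "suppressed"     # operator was alerted recently; log only
        self.last_alert[subsystem] = now_s
        return "alert"
```

Keeping the gate separate from detection means the alerting policy can be tuned per platform and mission without retraining the underlying anomaly models.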

Finally, the possibility of active defense is intriguing as it would allow immediate and automatic response for cyber-attacks, stopping them in their tracks. The risk with any active defense is that it could be tricked by attackers into providing an inappropriate response, in effect becoming a part of the attack itself. Design precautions would be necessary to ensure that attack suppression actions delivered by such an approach could not create consequences beyond what the original attack would have achieved by itself.

Conclusion

Modern weapons platforms continue to reach new heights of interconnectivity and software-defined automation. With these enhancements comes the need to address the increasing cyber security risks. Evidence from the commercial and industrial sectors suggests that many of the access vectors and attack methods observed there also apply to DoD platforms, with consequences that are potentially much more severe. Despite this reality, many modern weapons system platforms currently operate without sufficient means of providing detailed situational awareness into their cyber security state.

Embedded network monitoring enables a near-term capability to detect or prevent cyber-attacks that are a very real threat today. Through continuing research, we have characterized a wide range of embedded network-based attacks and established a corresponding set of observables. A Cyber Warning Receiver measures these observables over time and identifies anomalous or malicious activity. In addition to human-defined detection rules, it implements system behavior models derived using machine learning. The use of learned system behaviors enables deep inspection of messages traversing these interfaces to verify they are operating on schedule, that the expected correlations exist between various data fields, and that data ranges and rates of change are within their expected values.

When a cyber-attack occurs, the observations and anomalies that result are collected and examined using a data fusion process. This process estimates the underlying security state of the platform and tracks attacker actions. When critical systems are involved or a survivability risk is identified, a Cyber Warning Receiver can alert operators. Cyber warning capabilities form a key addition to the suite of platform survivability equipment, providing visibility into the cyber domain and keeping the warfighter safe in the face of this emerging advanced threat.

References

- Gross, Doug. 2013. Foul-mouthed hacker hijacks baby’s monitor. August 14. Accessed April 25, 2017. http://www.cnn.com/2013/08/14/tech/web/hacked-baby-monitor.

- Goodin, Dan. 2017. Smart TV hack embeds attack code into broadcast signal—no access required. March 31. Accessed April 25, 2017. https://arstechnica.com/security/2017/03/smart-tv-hack-embeds-attack-code-into-broadcast-signal-no-access-required/.

- Miller, Charlie, and Chris Valasek. 2015. “Remote exploitation of an unaltered passenger vehicle.” Black Hat USA 2015.

- Falliere, Nicolas, Liam O Murchu, and Eric Chien. 2011. W32.Stuxnet Dossier, Version 1.4. Malware Analysis, Symantec.

- Clements, Paul, and John K. Bergey. 2005. The U.S. Army’s Common Avionics Architecture System (CAAS) Product Line: A Case Study. Technical Report. Pittsburgh, PA: Carnegie Mellon Software Engineering Institute.

- Ljung, Lennart, Hakan Hjalmarsson, and Henrik Ohlsson. 2011. Four encounters with system identification. European Journal of Control, 5-6, 449-471; Pillonetto, Gianluigi. 2016. The interplay between system identification and machine learning. arXiv:1612.09158v1.

- Rhodes, B.J., Bomberger, N.A., Zandipour, M., Garagic, D., Stolzar, L.H., Dankert, J.R., Waxman, A.M., & Seibert, M. (2009). Automated activity pattern learning and monitoring provide decision support to supervisors of busy environments. Intelligent Decision Technologies, 3, 59–74; Rhodes, B.J., Bomberger, N.A., Zandipour, M., Stolzar, L.H., Garagic, D., Dankert, J.R., & Seibert, M. (2009). Anomaly detection & behavior prediction: Higher-level fusion based on computational neuroscientific principles. In N. Milisavljevic (Ed.), Sensor and Data Fusion (pp. 323–336). Croatia: In-Teh.

- Bengio, Y., Courville, A., & Vincent, P. (2013). Representation learning: A review and new perspectives. IEEE Trans. PAMI (Special issue: Learning Deep Architectures), 35, 1798–1828. doi:10.1109/tpami.2013.50; Hinton, G. E., & Salakhutdinov, R. R. (2006). Reducing the dimensionality of data with neural networks. Science, 313 (5786), 504–507. doi:10.1126/science.1127647.

- Zandipour, M., Rhodes, B.J., & Bomberger, N.A. (2008). Probabilistic prediction of vessel motion at multiple spatial scales for maritime situation awareness. In Proceedings of the 10th International Conference on Information Fusion, Cologne, Germany, June 30 – July 3, 2008; Dankert, J.R., Zandipour, M., Pioch, N., Biehl, B., Bussjager, R., Chong, C.Y., Schneider, M., Seibert, M., Zheng, S., & Rhodes, B.J. (2010). MIFFSSA: A multi-INT fusion and discovery approach for Counter-Space Situational Awareness. In Proceedings of 2010 Space Control Conference (SCC), Lexington, MA, USA, May 1–3, 2010.