Today’s cyber defenders find themselves at a disadvantage despite technological advances in cyber defense. Among the chief causes of this disadvantage is the asymmetry in a cyber conflict that favors the attacker.

On one side of the equation, defenders must shorten detection and response times to avert or mitigate attacks. On the other side, defenders must lengthen the attacker’s time to compromise by disrupting the attacker.

Under the assumption that increased cyberspace security is the main goal of cybersecurity policies, the current cybersecurity approach is not working. Cyberspace seems to have become less secure despite increasing expenditures on various aspects of cybersecurity. Defenders continue to face significant challenges despite technological advances in security detection, prevention, and monitoring. Firewalls, vulnerability management, and intrusion prevention systems have proven ineffective against advanced threat actors. Attackers have successfully created attacks targeting vulnerabilities of which defenders are unaware, and the attackers have avoided detection through effective concealment. (Raymond, Conti, Cross & Nowatkowski, 2014) Not only is detection failing, but the remediation time for cyber-attacks continues to increase. (Suby & Dickson, 2015)

The Attacker’s Advantage

Cyber attackers have the advantage because the attacker needs to exploit only a single vulnerability, whereas the defender has the much costlier task of mitigating all vulnerabilities. Attackers can choose the time and place of the attack, which further disadvantages the defenders. The ease with which an attacker can acquire and use an exploit, coupled with the low likelihood of detection, favors the attackers. (Zheng & Lewis, 2015) Once inside a network, individual actors in the cyber domain can have an asymmetric advantage and possess highly dangerous capabilities.

An attack must first be detected before a response is possible. The increasing sophistication of attacks makes the identification of both successful and unsuccessful attacks more difficult. The detection of the attack should occur as early in the cyber-attack lifecycle, or cyber kill chain, as possible to minimize the ramifications of the attack. Many sophisticated attacks, known as advanced persistent threats (APT), seek to establish persistence from which to operate and call out to a command-and-control system. (Byrne, 2015) The attacker can establish this persistence because organizations are often unaware of what software products are installed on each device.

Attackers who invest in an APT are highly motivated and will devote significant time to compromising a target to achieve a specific goal. These threat actors will map out multiple paths to reach the target and pivot their attack as necessary to reach the end goal. (Byrne, 2015) With the expanding complexity of systems, organizations present an increasingly large attack surface. The greater the attack surface, the more opportunities the attacker has to penetrate the perimeter and establish persistence within the environment. Detection of APTs by either signature or anomaly detection methods is challenging because APTs are crafted for a particular target and often use unique attack vectors. (Virvilis, Serrano & Vanautgaerden, 2014)

The Defender’s Disadvantage

Organizations typically invest in point solutions to address cybersecurity issues. Such an approach results in organizations attempting to link together many disparate solutions into an architecture and framework unique to each organization. (Fonash & Schneck, 2015) Defenders must select and configure an increasing number of defenses of increasing complexity. Much of the configuring of defenses is conducted manually, and the defenders often do not have a full understanding of the integration points between the defenses or the associated risks with each defense. (Soule, Simidchieva, Yaman, Loyall, Atighetchi, Carvalho & Myers, 2015) Organizations may add defenses that provide little increase in security while introducing unacceptable costs and increasing the attack surface. New defenses may have adverse side effects when deployed in combination with existing defenses. Further, a fundamental limitation of reactive defense is that network connectivity automatically amplifies the effect of the attacks; however, reactive defenses are not so amplified.

Attackers can often reuse exploits due to a lack of effective, timely information sharing amongst defenders. If the community does not share information concerning cyber-attacks, the attackers can reuse the same attack methods on multiple organizations. Without information sharing, each organization is left to detect and analyze each attack. Organizations do not have the resources and knowledge to defend against the myriad of attacks when working independently.

Defenders also face the paradox of having too much data to deal with while, at the same time, missing critical data necessary to detect and analyze cyber attacks. (Brown, Gommers & Serrano, 2015) Cybersecurity operational environments are dynamic and can differ greatly between implementations. The vast volume of data and the wide variety of security devices challenge the analytical capabilities of human responders. (Lange, Kott, Ben-Asher, Mees, Baykal & Vidu, 2017) The rate of legitimate changes within large, complex enterprise systems makes the empirical validation and quantification of the attack surface prohibitively challenging. (Soule, Simidchieva, Yaman, Loyall, Atighetchi, Carvalho & Myers, 2015) Organizations require tools to manage the vast amounts of information they now collect from numerous sources. These tools must convert the information into actionable knowledge.

Closing the Gap

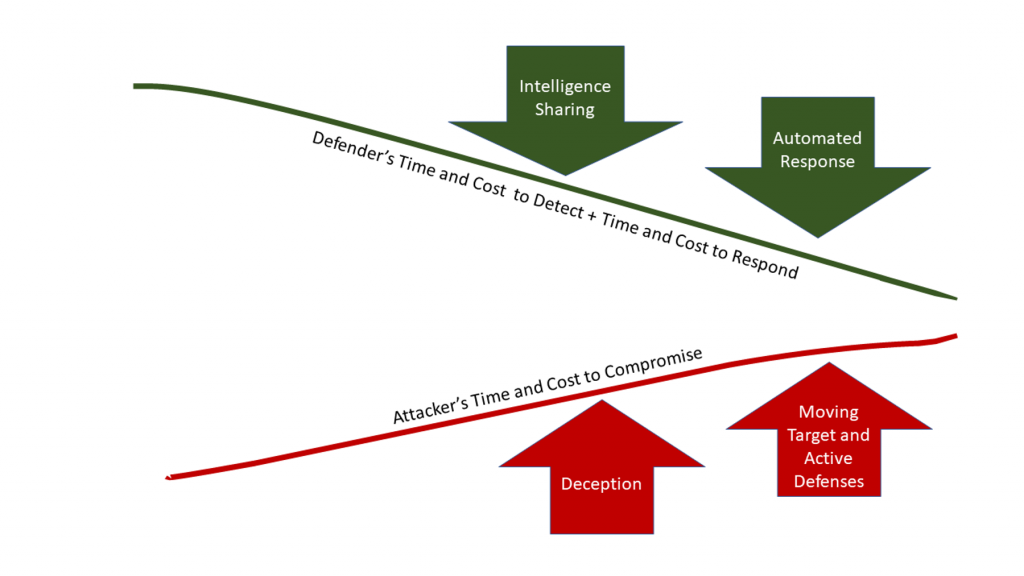

Cyber defenders must address both sides of the equation to narrow the gap between the attackers’ time to compromise and the defenders’ time to respond. An integrated approach involving security orchestration, automated response, information sharing, and advanced defense methods can reduce the competitive gap between attackers and defenders. Figure 1 depicts intelligence sharing, automated response, deception techniques, and advanced defensive methods working together to reduce the gap between the time to compromise and the time to respond.

4.1 Boyd’s OODA Loop

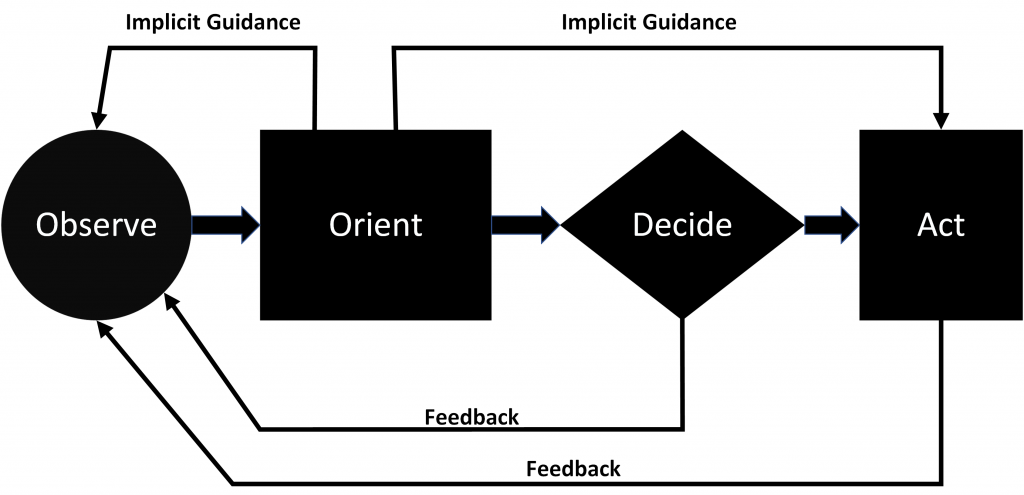

The observe-orient-decide-act (OODA) loop theory, developed by military strategist and Air Force pilot John Boyd, originally referred to gaining superiority in air combat. (Mepham & Ghinea, 2014) The concept behind the OODA loop was that completing the loop more quickly than the opponent prevented the opponent from gaining superiority in air combat. Organizations can also apply the OODA loop to cyber-incident response. If the defender can respond to the attacker’s actions before the attacker can complete the OODA loop, the defender can gain cyber superiority. (Mepham & Ghinea, 2014)

In the seminal presentation on air combat in which he developed the OODA loop concept, Boyd suggested that to win it is necessary to get inside the adversary’s OODA loop. Interrupting the adversary’s OODA loop can cause confusion and disorder for the opponent. (Boyd, 1986) In cyber defense, changing the situation faster than the attacker can observe, orient, decide, and act lessens dwell time and gives the attacker less of a chance to act. Also, by inserting oneself into the opponent’s OODA loop, a combatant can discover the strengths, weaknesses, tactics, and intent of the adversary. (Boyd, 1986)

The underlying goal of the OODA loop is to be faster than the enemy. This goal means that cyber defenders must streamline their own command and control while also interfering with the attacker’s command and control. In applying the OODA loop theory to cybersecurity, intelligence sharing and automated response help speed the defender’s OODA loop, whereas deception and moving target defenses operate within the opponent’s OODA loop, slowing and confusing the attacker.

Operating in the attacker’s OODA loop using deceptions that disrupt the attacker’s orientation will compromise the attacker’s subsequent decisions and actions. (Almeshekah & Spafford, 2016; Stech, Heckman & Strom, 2016) Deception-based defenses provide an advantage to the defenders as the deceptive information will affect the attacker’s observation and orientation stages of the OODA loop. (Almeshekah & Spafford, 2016; Stech, Heckman & Strom, 2016) Defensive deceptions can help consume the attacker’s resources and disrupt decision-making by assigning additional tasks to the attacker. (Stech, Heckman & Strom, 2016) Also, by slowing the attacker, the defender gains more time to further orient, decide, and act. (Almeshekah & Spafford, 2016)

4.2 Automated Response

Current human-centered cyber defense practices cannot keep pace with the speed and volume of the threats targeting organizations. (Johns Hopkins Applied Physics Laboratory, 2016) There is a need to drastically increase the speed of both the detection of and response to cyber-attacks. Many risk-based decisions must be automated to facilitate this increase in detection and response speed. (Fonash & Schneck, 2015) Human involvement must shift from direct action toward oversight. Increasing the speed and efficiency of detection and response requires rapid exchange of threat and incident detail among the automated defense systems. Such rapid exchange will require interoperability between systems at the technical, semantic, and policy levels. (Fonash & Schneck, 2015)
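
As a minimal sketch of what automating a risk-based decision with human oversight might look like, the following Python example maps an incoming indicator to an action and leaves uncertain cases for an analyst. The `Indicator` fields, the `triage` function, the scoring rule, the thresholds, and the action names are all hypothetical illustrations; they are not taken from any of the cited frameworks.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    BLOCK = "block"          # act automatically, no analyst in the loop
    REVIEW = "human_review"  # queue for an analyst (human oversight)
    IGNORE = "ignore"        # log only


@dataclass(frozen=True)
class Indicator:
    value: str         # e.g. an IP address or file hash
    confidence: float  # 0.0-1.0, how sure the source is (assumed scale)
    severity: float    # 0.0-1.0, estimated impact (assumed scale)


def triage(indicator: Indicator, block_at: float = 0.7,
           review_at: float = 0.3) -> Action:
    """Map an indicator to an action using a simple risk score.

    risk = confidence * severity is an illustrative scoring rule only;
    the thresholds stand in for an organization's risk tolerance.
    """
    if not 0.0 <= review_at <= block_at <= 1.0:
        raise ValueError("need 0 <= review_at <= block_at <= 1")
    risk = indicator.confidence * indicator.severity
    if risk >= block_at:
        return Action.BLOCK
    if risk >= review_at:
        return Action.REVIEW
    return Action.IGNORE


if __name__ == "__main__":
    # 203.0.113.7 is a documentation-only address (RFC 5737).
    ioc = Indicator("203.0.113.7", confidence=0.9, severity=0.9)
    print(triage(ioc))                # default thresholds
    print(triage(ioc, block_at=0.9))  # a more cautious organization
```

With the default thresholds the sample indicator is blocked automatically, while an organization that raises `block_at` to 0.9 would route the same indicator to human review. Only the middle band of cases reaches an analyst, which is one way to read the shift from direct involvement to oversight.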

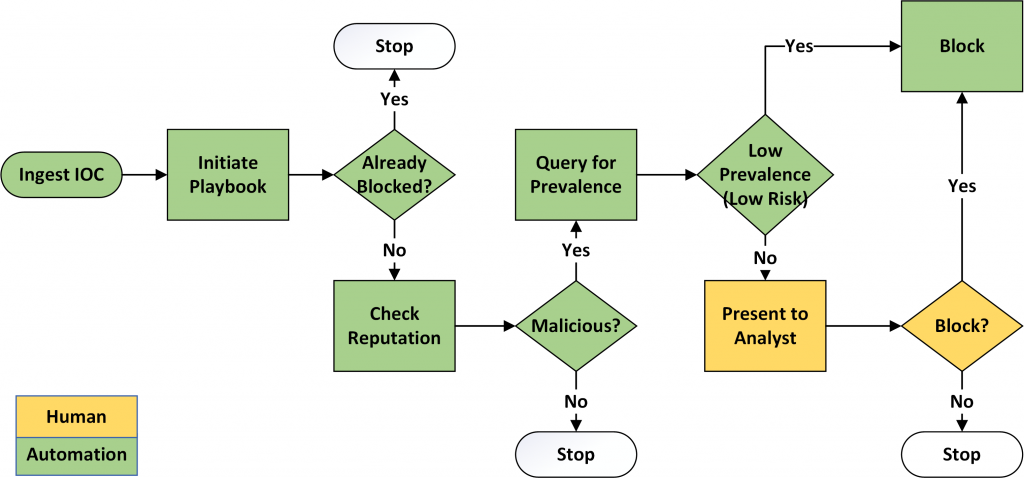

The Department of Homeland Security (DHS), the National Security Agency (NSA), and Johns Hopkins University Applied Physics Lab (JHU-APL) jointly developed the Integrated Adaptive Cyber Defense (IACD) framework in collaboration with private industry leaders. (Johns Hopkins Applied Physics Laboratory, 2016) The DHS and the NSA started the effort in 2014 to help address the continued malicious cyber-attacks on government and private industry. Current human-centered cyber defense practices cannot keep up with the increasing volume and speed of cyber threats. The IACD framework seeks to close this gap by automating cyber defense tasks and increasing information sharing between enterprises. (Walton, Watson, Kosecki, Mok & Burger, 2018) The IACD framework uses automation to increase the speed of detection of and response to cyber threats and relies on information sharing to limit the reusability of exploits against the community. (Johns Hopkins Applied Physics Laboratory, 2016) By implementing the traditional OODA loop at speed and scale, IACD seeks to decrease cyber operation timelines from months to milliseconds. (Johns Hopkins Applied Physics Laboratory, 2016)

In 2018, the Financial Services Information Sharing and Analysis Center (FS-ISAC) and JHU-APL partnered with three financial institutions to pilot the IACD framework. (Walton, Watson, Kosecki, Mok & Burger, 2018) The financial sector IACD pilot was designed to demonstrate the deployment of the framework into production environments to foster adoption within the sector. The integrated pilot sought to understand how intelligence enrichment could help organizations with varying policies and risk tolerances determine what action to take. The pilot focused on the ingestion of threat intelligence from the FS-ISAC at three financial institutions: Huntington Bank, Mastercard, and Regions Bank. Each pilot financial institution implemented the IACD framework to take automated action on the threat intelligence. The results of the pilot showed that automation decreased the average time for the FS-ISAC to generate an indicator of compromise (IOC) from nearly six hours to one minute. (Frick, 2018) Automation also reduced the average time for the financial institutions to receive, enrich, and triage IOCs from four hours to three minutes. In total, the automation reduced the average time to produce an IOC, disseminate an IOC, and initiate a response from approximately 10 hours to 4 minutes.
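
To put the reported pilot figures on a common scale, the short Python calculation below converts each stage to minutes and computes the ratio of the time before automation to the time after. The stage labels and the `speedup` helper are introduced here for illustration only. Because the source gives rounded figures ("nearly six hours", "approximately 10 hours"), the ratios should be read as approximate.

```python
# Times reported for the FS-ISAC pilot, converted to minutes.
# The hour values are rounded in the source, so the ratios are approximate.
STAGES = {
    "generate IOC": (6 * 60, 1),
    "receive, enrich, and triage IOC": (4 * 60, 3),
    "end to end": (10 * 60, 4),
}


def speedup(before_min: float, after_min: float) -> float:
    """Return how many times faster the automated stage is."""
    if after_min <= 0:
        raise ValueError("after_min must be positive")
    return before_min / after_min


for name, (before, after) in STAGES.items():
    ratio = speedup(before, after)
    print(f"{name}: {before} min -> {after} min (about {ratio:.0f}x faster)")
```

On these rounded inputs the ratios come out to roughly 360x for IOC generation, 80x for receipt, enrichment, and triage, and 150x end to end. The two stage times also sum to the reported totals (six plus four hours before, one plus three minutes after).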

4.3 Intelligence Sharing

Reactive cyber defense strategies are insufficient to deal with the increasing persistence and agility of cyber attackers. (Zheng & Lewis, 2015) Information sharing amongst defenders can increase the efficiency in detecting and responding to cyber-attacks. When one organization detects an attack, collaborating organizations can use the information to take preventative measures. (Zheng & Lewis, 2015) Community sharing of attack intelligence fosters collective action. The number and sophistication of cyber-attacks require collective response action. Collective action requires that participants share and make use of information from attempted or successful attacks. For collective action to be effective, the other systems within the community must be informed of an attack before those systems are themselves attacked.
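
The requirement that community members be informed before they are themselves attacked can be illustrated with a small Python sketch. The `SharedFeed` and `Member` classes below are hypothetical, in-memory stand-ins for a sharing service and a participating organization; a real deployment would rely on an agreed exchange format and transport, which this sketch does not attempt to model.

```python
from dataclasses import dataclass, field


@dataclass
class SharedFeed:
    """In-memory stand-in for a community sharing service (hypothetical)."""
    indicators: list = field(default_factory=list)

    def publish(self, indicator: str) -> None:
        # Keep first-seen order and skip duplicates.
        if indicator not in self.indicators:
            self.indicators.append(indicator)


@dataclass
class Member:
    """A participating organization with its own local blocklist."""
    name: str
    blocklist: set = field(default_factory=set)
    cursor: int = 0  # how much of the feed has already been consumed

    def sync(self, feed: SharedFeed) -> int:
        """Pull indicators published since the last sync; return the count."""
        new = feed.indicators[self.cursor:]
        self.blocklist.update(new)
        self.cursor = len(feed.indicators)
        return len(new)

    def allows(self, source: str) -> bool:
        return source not in self.blocklist


if __name__ == "__main__":
    feed = SharedFeed()
    org_a, org_b = Member("org-a"), Member("org-b")

    # org-a detects an attack and shares the source (documentation address).
    feed.publish("198.51.100.23")

    print(org_b.allows("198.51.100.23"))  # before syncing: still allowed
    org_b.sync(feed)
    print(org_b.allows("198.51.100.23"))  # after syncing: blocked
```

The interval between `publish` and `sync` is the window in which the same attack can be reused against the second organization, which is why the speed of sharing matters as much as the sharing itself.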

Intelligence sharing and automated response work together to reduce the defender’s cost and time. Information sharing can increase efficiency in detecting and responding to cyber-attacks. Collaborating organizations can use the information shared by one organization to take preventative measures to thwart attacks. (Zheng & Lewis, 2015) Collective action, fostered by community sharing of intelligence, can act as an immune system for the collaborating organizations. As shown in the FS-ISAC pilot, security automation can increase the speed of response to an attack and the speed of proactively applying intelligence.

Maintaining a secure and resilient cyber ecosystem requires more than the sharing of attack information. Organizations must use the shared information to assess the effectiveness of courses of action taken and to develop, evaluate, and implement alternative courses of action as needed. Cyber intelligence aims to support decision making regarding the detection of, prevention of, and response to cyber attacks by developing reliable conclusions based on facts. (Brown, Gommers & Serrano, 2015) Organizations need to move beyond creating interoperable systems to share data and develop methods to generate value from the shared information.

4.4 Deception

Defenders should consider using deception as a critical component of their defensive posture since attackers have repeatedly demonstrated the ability to subvert traditional defenses. (Raymond, Conti, Cross & Nowatkowski, 2014) Conventional defensive tactics focus on detecting and preventing the attacker’s actions, while deception focuses on manipulating the attacker’s perceptions. (Almeshekah & Spafford, 2016; Stech, Heckman & Strom, 2016; De Faveri & Moreira, 2018) Deception can manipulate the attacker’s thinking and cause the attacker to act in a way beneficial to the defender. (Rauti & Leppanen, 2017) The use of deception can also cause the attacker to expend resources and force the attacker to reveal techniques and capabilities. (De Faveri & Moreira, 2018; Rauti & Leppanen, 2017; Dewar, 2017) In addition to detecting intruders, deception methods can provide an effective means of identifying an internal threat. (Fraunholz, Krohmer, Pohl & Schotten, 2018)

Cyberspace provides great potential for the practice of deception in cyber defense operations. (Raymond, Conti, Cross & Nowatkowski, 2014) In the cyber realm, combatants can construct and move deceptive terrain with ease. Companies use the deceptive practice of honeypots and honeynets to divert attackers from valuable assets. A honeypot is designed as a decoy to entice attackers. In addition to slowing the attacker, honeypots allow defenders to gain knowledge of the attackers’ tactics, techniques, and procedures. (Olagunju & Samu, 2016; Saud & Islam, 2015) Engaging the attacker early and maintaining deception with a honeypot allows the defender to collect and record details about the attacker’s attempts to compromise the system. Honeypots provide a versatile approach to network defenses. Defenders can deploy preventative and reactive honeypots in various network environments and situations. Also, honeypots can provide efficiencies over traditional intrusion detection systems. Honeypots generate far less logging data than traditional intrusion detection since the honeypots are not involved in normal operations. (Almeshekah & Spafford, 2016)

Deploying honeypots requires expertise and ongoing maintenance to ensure the honeypot remains relevant. For a deception operation to be effective, it must present and maintain a plausible story to the attacker. (De Faveri & Moreira, 2018) The honeypot must also be realistic enough that once it lures the attacker in, the honeypot continues to deceive the attacker. (Almeshekah & Spafford, 2016) Also, the honeypot must provide capabilities for the defender to detect and capture all actions taken by the attacker while in the honeypot.

Deception tactics are not limited to honeypots and honeynets. Defenders can deploy many types of deception, which can provide an early-warning system of possible intrusions. (Virvilis, Serrano & Vanautgaerden, 2014; Rauti & Leppanen, 2017) Defenders can create a wide range of fake entities, including files, database entries, and passwords, which only a malicious attacker should access. (Rauti & Leppanen, 2017) Defensive systems monitor the fake entities and alert on any interactions with the bogus resources. Several of these methods are simple to implement and require no new technology. Organizations should consider combining techniques into a deception framework. The use of multiple techniques increases the effectiveness of the deception. For example, Fraunholz et al. developed a deception framework and a reference implementation that includes deceptive tokens for files, user accounts, database entries, and communication ports. (Fraunholz, Krohmer, Pohl & Schotten, 2018)
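
The monitoring of fake entities described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the framework of Fraunholz et al.: the `HoneytokenMonitor` class, its method names, and the sample decoy names are all hypothetical, and a real system would hook into file, database, or authentication audit events instead of being called directly.

```python
from datetime import datetime, timezone


class HoneytokenMonitor:
    """Tracks decoy resources and records an alert whenever one is touched."""

    def __init__(self, decoys):
        self._decoys = set(decoys)
        self.alerts = []

    def record_access(self, user: str, resource: str) -> bool:
        """Return True and store an alert if `resource` is a decoy."""
        if resource not in self._decoys:
            return False  # ordinary resource, nothing to report
        self.alerts.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "resource": resource,
        })
        return True


if __name__ == "__main__":
    # Hypothetical decoys: a fake file and a fake service account.
    monitor = HoneytokenMonitor({
        "/finance/passwords_backup.xlsx",
        "svc_backup_admin",
    })
    monitor.record_access("alice", "/finance/q3_report.xlsx")         # real file
    monitor.record_access("unknown", "/finance/passwords_backup.xlsx")  # decoy
    for alert in monitor.alerts:
        print(alert["user"], "touched decoy", alert["resource"])
```

Because no legitimate workflow should reference a decoy, every alert is worth investigating, which keeps the alert volume low compared with monitoring all resources.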

As with all approaches to cybersecurity, the use of fake entities for deception comes with challenges. False alarms, or false positives, can occur when an employee interacts with a fake entity on the system. However, an employee’s interaction with a fake entity, or honeytoken, may indicate an insider threat. Perhaps the biggest challenge with fake entities is creating them. Fake entities must look realistic to the attacker to be effective. (Virvilis, Serrano & Vanautgaerden, 2014; Rauti & Leppanen, 2017)

4.5 Moving Target Defenses

Moving target defenses (MTD) diversify the critical components of homogeneous environments. (Winterrose, Carter, Wagner & Streilein, 2014; Ge, Yu, Shen, Chen, Pham, Blasch & Lu, 2014) By diversifying the attack surface presented to the attacker, the defender can increase the operational costs of the attackers. Dynamically changing the attack surface at run-time can reduce the attacker’s asymmetric advantage by complicating the attacker’s reconnaissance and exploitation efforts. Moving target defenses encompass emerging methods that make it more difficult for attackers to detect entry points into a system, reduce vulnerabilities, make remaining vulnerability exposures more transient, and decrease the effectiveness of attacks. (Soule, Simidchieva, Yaman, Loyall, Atighetchi, Carvalho & Myers, 2015)

Most systems operate with a static configuration, including the network, operating system, and application configurations. An attacker can probe these static systems to locate specific vulnerabilities for which the attacker has an exploit. The static configurations provide the attacker with time to conduct reconnaissance, develop a plan, and launch an attack. (Ge, Yu, Shen, Chen, Pham, Blasch & Lu, 2014) Defenders use MTDs to make computer systems more dynamic, thus increasing the difficulty and the cost of cyber-attacks. Moving target defenses change the static nature of the system in various ways including changing properties over time, introducing randomness into the internals of a system to make them less deterministic, and increasing the diversity in the computing environment. (Okhravi, Streilein & Bauer, 2016) By dynamically changing the environment, MTDs increase the effort on the part of the attacker while decreasing the attacker’s certainty of success. (Atighetchi, Benyo, Eskridge & Last, 2016)
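
One way to picture "changing properties over time" is a service whose listening port changes every time slot. The Python sketch below derives the current port from a shared secret and the clock using an HMAC; it illustrates the general idea only and is not drawn from any of the cited MTD systems. The function name `current_port`, the 60-second interval, and the choice of the dynamic port range are assumptions made for this example.

```python
import hashlib
import hmac
import struct

PORT_MIN, PORT_MAX = 49152, 65535  # dynamic/private port range


def current_port(secret: bytes, now: float, interval: int = 60) -> int:
    """Derive the service port for the time slot containing `now`.

    Both endpoints hold `secret`, so both compute the same port.
    An observer without the secret cannot predict the next port from
    earlier ones, assuming HMAC-SHA256 remains unbroken.
    `now` is seconds since the epoch and must be non-negative.
    """
    if interval <= 0:
        raise ValueError("interval must be positive")
    slot = int(now // interval)  # index of the current time slot
    digest = hmac.new(secret, struct.pack(">Q", slot), hashlib.sha256).digest()
    span = PORT_MAX - PORT_MIN + 1
    return PORT_MIN + int.from_bytes(digest[:4], "big") % span


if __name__ == "__main__":
    import time

    key = b"example-shared-secret"  # placeholder; use a random key in practice
    t = time.time()
    print("port now:       ", current_port(key, t))
    print("port next slot: ", current_port(key, t + 60))
```

A scan result gathered in one slot may be stale by the next, which is how the technique takes away the reconnaissance time that a static configuration provides. The sketch ignores clock skew and in-flight connections, both of which a real deployment would have to handle and which contribute to the operational costs discussed below.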

Organizations may be reluctant to deploy MTD techniques because many MTD methods can potentially negatively impact the network’s mission more than they positively impact security. (Zaffarano, Taylor & Hamilton, 2015) Many MTD techniques can have negative performance impacts that may be prohibitive. (Okhravi, Streilein & Bauer, 2016) Also, organizations must take care to avoid implementing security solutions, including MTD methods, which add little value, increase operational costs, expand the attack surface, or create issues with existing security components. (Atighetchi, Benyo, Eskridge & Last, 2016)

The inability of many proposed MTD techniques to guarantee that varying the attack surface will enhance security effectiveness presents a major roadblock to the adoption of MTD techniques. (Hong & Kim, 2015) Measuring an MTD’s effectiveness, or the degree to which an MTD enhances security while minimizing defender effort, is difficult. The evaluation of the effectiveness of MTD techniques is further complicated since the attackers need only to exploit the weakest link. (Okhravi, Streilein & Bauer, 2016)

4.6 Active Defenses

The active defense approach is based on countermeasures designed to detect and mitigate threats in real-time combined with the capability of taking offensive actions against threats both inside and outside of the defender’s network. (Dewar, 2014) Active defense emphasizes proactive countermeasures aimed at counteracting the immediate effects of incidents. Active cyber defense counters an attack by detecting and then stopping malware, or by concealing target devices to counter espionage. Examples of active cyber defense methods include white worms, hacking back, address hopping, and honeypots. (Dewar, 2017)

White worms are like computer viruses; however, the purpose of a white worm is to locate and destroy malicious software, identify system intrusions, or perform recovery procedures. (Dewar, 2014) Defenders deploy white worms within their network to seek out malicious intrusions, much like the human body deploys white blood cells to attack infections. White worms can be designed to destroy malicious software once discovered or to analyze the software to assist in the attribution and location of the perpetrators. (Dewar, 2017) However, defenders rarely deploy white worms operationally due to significant drawbacks. (Dewar, 2017) Defenders may have difficulty controlling white worms, especially if the white worms are self-propagating. There is a risk that a white worm will escape the network in which it was deployed, possibly through an internet connection or removable storage. After escaping the network, the white worm may continue to replicate and cause unintended collateral damage to external networks. The potential costs associated with a white worm going rogue will often outweigh any potential benefit.

Active defense employs two classes of methods. Within the defender’s networks, active defenses detect and mitigate threats in real-time. (Dewar, 2014) With active defenses, the defender can also employ offensive countermeasures beyond the defender’s network. Defenders can, after identifying the source devices of an attack, take aggressive, offensive action to disable the source devices. Such aggressive measures operate outside the defender’s network. (Dewar, 2014) Many experts consider retaliatory actions or hacking back illegal as these methods require accessing systems of another organization without permission. (Heinl, 2014) Defenders who take offensive actions outside of the boundary of their network face legal implications. Further, the accessing of the command and control server of an attacker without permission may expose the company to criminal or civil actions. (Heinl, 2014) The ability to anonymize traffic on the Internet also complicates the use of offensive practices as the defender may not be able to attribute the incident to the perpetrator accurately. (Dewar, 2014)

Conclusion

The use of security automation and adaptive cyber defenses to combat cybercrime is an area of increasing research interest. Cyber attackers enjoy a significant advantage over the defenders in cyber conflict. The attackers’ advantage stems from multiple issues including the asymmetry of cyber conflict (Winterrose, Carter, Wagner & Streilein, 2014), the increased sophistication of cyber attacks (Byrne, 2015), the speed and number of attacks (Fonash & Schneck, 2015), and a shortage of cybersecurity talent. (Suby & Dickson, 2015; Morgan, 2017) Current human-centered cyber defense practices cannot keep pace with the threats targeting organizations.

An integrated approach that speeds detection and response while slowing the attack is required. Security automation and intelligence sharing work together to reduce the defender’s cost and time. Information sharing can increase efficiency in detecting and responding to cyber-attacks. Collaborating organizations can use the information shared by one organization to take preventative measures to thwart attacks. Collective action, fostered by community sharing of intelligence, can act as an immune system for the collaborating organizations. Security automation can increase the speed of response to an attack and the speed of proactively applying intelligence.

Cyber defenders can use many defensive methods to deter or delay attackers by operating within the attacker’s OODA loop. Advanced defense methods, including MTD, active defenses, and deception, can raise the cost of an attack and slow the attack. Moving target defenses seek to increase the operational costs of the attackers by diversifying the attack surface presented to the attacker. (Winterrose, Carter, Wagner & Streilein, 2014) Active cyber defense countermeasures detect and mitigate threats in real-time and can take offensive actions both inside and outside of the defender’s network. (Dewar, 2014) Deception can accomplish two major objectives by disrupting the attacker’s OODA loop. First, deception can confuse and slow the attacker, causing the attacker to expend resources. Second, deception can reveal the attacker’s capabilities and techniques.

References

- Almeshekah, M. H., & Spafford, E. H. (2016). Cyber Security Deception. In S. Jajodia, V. Subrahmanian, V. Swarup, & C. Wang (Eds.), Cyber Deception (pp. 23-50). Switzerland: Springer. doi:10.1007/978-3-319-32699-3_2

- Atighetchi, M., Benyo, B., Eskridge, T. C., & Last, D. (2016). A decision engine for configuration of proactive defenses: Challenges and concepts. Resilience Week (pp. 8-12). Chicago, IL: IEEE. doi:10.1109/RWEEK.2016.7573299.

- Boyd, J. R. (1986). Patterns of conflict. Retrieved from http://dnipogo.org/john-r-boyd/

- Brown, S., Gommers, J., & Serrano, O. (2015). From cyber security information sharing to threat management. Workshop on Information Sharing and Collaborative Security (pp. 43-49). Denver, CO: ACM. doi:10.1145/2808128.2808133.

- Byrne, D. J. (2015). Cyber-attack methods, why they work on us, and what to do. AIAA SPACE 2015 Conference and Exposition (pp. 1-10). Pasadena, CA: American Institute of Aeronautics and Astronautics. doi:10.2514/6.2015-4576.

- De Faveri, C., & Moreira, A. (2018). A SPL framework for adaptive deception-based defense. 51st Hawaii International Conference on System Sciences (pp. 5542-5551). Honolulu, HI. doi:10.24251/HICSS.2018.691

- Dewar, R. S. (2014). The triptych of cyber security: A classification of active cyber defense. 6th International Conference on Cyber Conflict (pp. 7-22). Tallinn, Estonia: NATO CCD COE Publications. doi:10.1109/CYCON.2014.6916392

- Dewar, R. S. (2017). Active cyber defense: Cyber defense trend analysis. Zurich, Switzerland: ETH Zurich.

- Fonash, P., & Schneck, P. (2015, January). Cybersecurity: From months to milliseconds. Computer, 42-50. doi:10.1109/MC.2015.11.

- Fraunholz, D., Krohmer, D., Pohl, F., & Schotten, H. D. (2018). On the detection and handling of security incidents and perimeter breaches: A modular and flexible honeytoken based framework. IFIP International Conference on New Technologies, Mobility and Security. Paris, France: IEEE. doi:10.1109/NTMS.2018.8328709

- Frick, C. (2018). IACD & FS ISAC financial pilot results. Integrated Cyber October 2018 Conference (pp. 1-28). Laurel, MD: Johns Hopkins University Applied Physics Lab.

- Ge, L., Yu, W., Shen, D., Chen, G., Pham, K., Blasch, E., & Lu, C. (2014). Toward effectiveness and agility of network security situational awareness using moving target defense (MTD). SPIE – The International Society for Optical Engineering (pp. 1-9). San Diego, CA: International Society for Optical Engineering. doi:10.1117/12.2050782

- Heinl, C. H. (2014). Artificial (intelligent) agents and active cyber defence: policy implications. 6th International Conference on Cyber Conflict (pp. 53-66). Tallinn, Estonia: NATO CCD COE Publications. doi:10.1109/CYCON.2014.6916395.

- Hong, J. B., & Kim, D. S. (2015). Assessing the effectiveness of moving target defenses using security models. IEEE Transactions on Dependable and Secure Computing, 13(2), 163-177. doi:10.1109/TDSC.2015.2443790

- Johns Hopkins Applied Physics Laboratory. (2016). Integrated Adaptive Cyber Defense (IACD) Baseline Reference Architecture. Laurel, MD: Johns Hopkins Applied Physics Laboratory. Retrieved from https://secwww.jhuapl.edu.

- Lange, M., Kott, A., Ben-Asher, N., Mees, W., Baykal, N., Vidu, C. M., et al. (2017). Recommendations for model-driven paradigms for integrated approaches to cyber defense. Adelphi, MD: US Army Research Laboratory. Retrieved from https://www.arl.army.mil.

- Mepham, K., & Ghinea, G. (2014). Dynamic cyber-incident response. 6th International Conference on Cyber Conflict (pp. 121-136). Tallinn, Estonia: NATO CCD COE Publications. doi:10.1109/CYCON.2014.6916399.

- Morgan, S. (2017). Cybersecurity Jobs Report: 2017 Edition. Herjavec Group. Retrieved from https://www.herjavecgroup.com.

- Okhravi, H., Streilein, W. W., & Bauer, K. S. (2016). Moving target techniques: Leveraging uncertainty for cyber defense. Lincoln Laboratory Journal, 22, 100-109.

- Olagunju, A. O., & Samu, F. (2016). In search of effective honeypot and honeynet systems for real-time intrusion detection and prevention. Proceedings of the 5th Annual Conference on Research in Information Technology (pp. 41-46). Boston, MA: ACM. doi:10.1145/2978178.2978184

- Rauti, S., & Leppanen, V. (2017). A survey on fake entities as a method to detect and monitor malicious activity. Euromicro International Conference on Parallel, Distributed and Network-Based Processing (pp. 386-390). St. Petersburg, Russia: IEEE. doi:10.1109/PDP.2017.34

- Raymond, D., Conti, G., Cross, T., & Nowatkowski, M. (2014). Key terrain in cyberspace: Seeking the higher ground. 6th International Conference on Cyber Conflict (pp. 287-300). Tallinn, Estonia: NATO CCD COE Publications. doi:10.1109/CYCON.2014.6916409.

- Saud, Z., & Islam, M. H. (2015). Towards proactive detection of advanced persistent threat (APT) attacks using honeypots. Proceedings of the 8th International Conference on Security of Information and Networks (pp. 154-157). Sochi, Russia: ACM. doi:10.1145/2799979.2800042

- Soule, N., Simidchieva, B., Yaman, F., Loyall, J., Atighetchi, M., Carvalho, M., . . . Myers, D. F. (2015). Quantifying & Minimizing attack surfaces containing moving target defenses. Resilience Week. Philadelphia, PA: IEEE. doi:10.1109/RWEEK.2015.7287449.

- Stech, F. J., Heckman, K. E., & Strom, B. E. (2016). Integrating cyber-D&D into adversary modeling for active cyber defense. In S. Jajodia, V. S. Subrahmanian, V. Swarup, & C. Wang (Eds.), Cyber Deception (pp. 1-22). Switzerland: Springer. doi:10.1007/978-3-319-32699-3_1

- Suby, M., & Dickson, F. (2015). The 2015 (ISC)2 Global Information Security Workforce Study. Mountain View, CA: Frost & Sullivan. Retrieved from https://www.boozallen.com.

- Virvilis, N., Serrano, O. S., & Vanautgaerden, B. (2014). Changing the game: The art of deceiving sophisticated attackers. 6th International Conference on Cyber Conflict (pp. 87-97). Tallinn, Estonia: NATO CCD COE Publications. doi:10.1109/CYCON.2014.6916397.

- Walton, T., Watson, K., Kosecki, C., Mok, J., & Burger, W. (2018). Piloting expanded threat enrichment and automation via the FS-ISAC feed. May 2018 Integrated Cyber. Laurel, MD: Johns Hopkins Applied Physics Lab. Retrieved from https://www.iacdautomate.org/may-2018-integrated-cyber

- Winterrose, M. L., Carter, K. M., Wagner, N., & Streilein, W. W. (2014). Adaptive attacker strategy development against moving target cyber defenses. ModSim World (pp. 1-11). Hampton, VA: ModSim World.

- Zaffarano, K., Taylor, J., & Hamilton, S. (2015). A quantitative framework for moving target defense effectiveness evaluation. MTD’15 (pp. 3-10). Denver, CO: Association for Computing Machinery. doi:10.1145/2808475.2808476

- Zheng, D. E., & Lewis, J. A. (2015). Cyber Threat Information Sharing: Recommendations for Congress and the Administration. Washington, DC: Center for Strategic & International Studies. Retrieved from https://www.csis.org.